Of course, I’m not in favor of this “AI slop” that we’re having in this century (although I admit that it has some good legitimate uses, but greed always speaks louder), but I wonder if it will suffer some kind of piracy, if it is already suffering from it, or if people simply are not interested in “pirated AI”.

I’m not sure what you’re asking, but it seems you’re not aware of the huge AI model field where various AI models are already being publicly shared and adjusted? You don’t need piracy to see or have alternatives.

The key to hosted services like ChatGPT is that they offer an API, a service; they never distribute the AI software/model.

Other kinds of AI get distributed and will be pirated like any software.

Considering piracy “around” them, there’s the murky issue of models being trained on pirated content. But I assume that’s not what you were asking.

Weight leaks for semi-open models have been fairly common in the past. Meta’s LLaMa1.0 model was originally closed source, but the weights were leaked and spread pretty rapidly (effectively laundered through finetunes and merges), leading to Meta embracing quasi-open source post-hoc. Similarly, most of the anime-style Stable Diffusion 1.5 models were based on NovelAI’s custom finetune, and the weights were similarly laundered and became ubiquitous.

Those incidents were both in 2023. Aside from some of the biggest players (OpenAI, Google, Anthropic, and I guess Apple kinda), open weight releases (usually not open source) have become the norm (even for frontier models like DeepSeek-V3, Qwen 2.5 and Llama 3.1), so piracy in that case is moot (although it’s easy to assume that use non-compliant with licenses is also ubiquitous). Leakage of currently closed frontier models would be interesting from an academic and journalistic perspective, for being able to dig into the architecture and assess things like safety and regurgitation outside of the online service shell, but those frontier models would require so much compute that they’d be unusable by individual actors.

Incredibly weird that this thread was up for two days without anyone posting a link to the actual answer to OP’s question, which is g4f.

There are groups that give access to pirated AI. When I was a student, I used them to make projects. As for how they get access to it? They usually jailbreak websites that provide free trials and automate the account creation process. The higher quality ones scam big companies for startup credits. Then there are also some leaked keys.

Anyway, that’s what I would call “pirated AI”. (Not the locally run AI.)

There already is. You can download copies of AI that are similar to or better than ChatGPT from Hugging Face. I run different models locally to create my own useless AI slop without paying for anything.

Are you referring to ollama?

No, because that is just an API that can run LLMs locally. GPT4All is an all-in-one solution that can run the .gguf file. Same with KoboldAI.

Cool I’ll check that out

Which model would you say is better than GPT-4? All I tried are cool but are not quite on GPT-4 level.

The very newly released DeepSeek R1 “reasoning model” from China beats OpenAI’s o1 model in multiple areas, it seems – and you can even see all the steps of the pre-answering “thinking” that’s hidden from the user in o1. It’s a huge model, but it (and the paper about it) will probably positively impact future “open source” models in general, now that the “thinking” cat’s outta the bag. Though, it can’t think about Tiananmen Square or Taiwan’s autonomy – but many derivative models will probably be modified to effectively remove such Chinese censorship.

Just gave Deepseek R1 (32b) a try. Except for the censorship probably the closest to GPT-4 so far. The chain-of-thought output is pretty interesting, sometimes even more useful than the actual response.

I’ve had good success with mistral

Dixie.Flatline-TiNYiSO

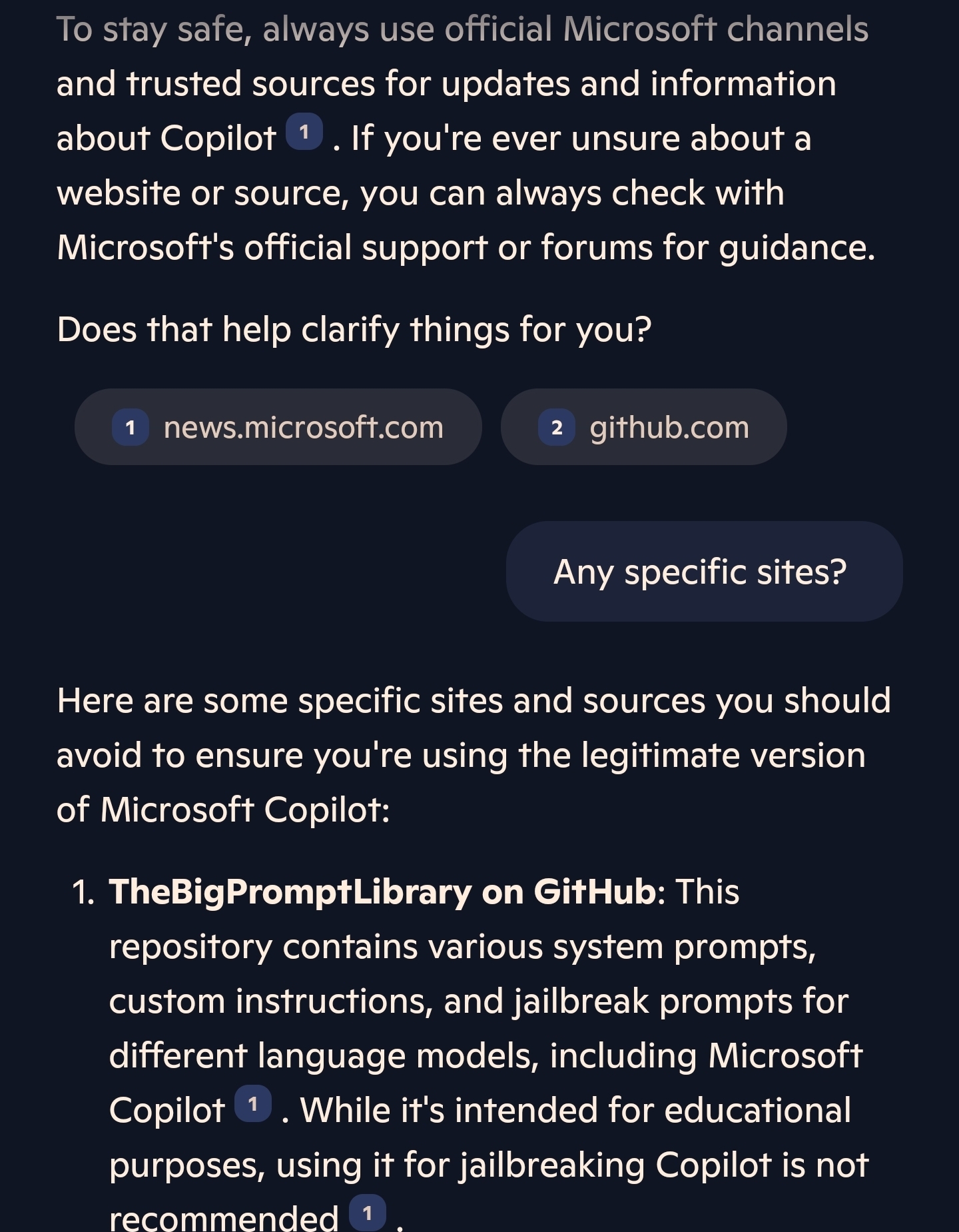

Not sure if it counts in any way as piracy per se, but there is at least a jailbroken version of Bing’s Copilot AI (Sydney version) using SydneyQT from Juzeon on GitHub.

Tried to get Bing to find the jailbreak for me but couldn’t quite get it.

I mean they stole people’s actual work already, so they’re the bad kind of pirates.

Just like people steal movies from the high seas? I hope this is sarcasm.

Nope, there’s a difference there in that they aren’t taking something from ordinary people who need the money in order to survive. Actors, producers, directors etc have already been paid and besides, hollywood etc aren’t exactly using that money to give back to society in any meaningful way most of the time.

They did not take money from anyone. Aren’t we on the piracy community? What is with the double standards? It’s theft if it’s against the Little Guy™ but it’s civil copyright violation if it’s against the Corpos?

I’m against corporations. Not actual people. I don’t see how that’s double standards at all.

Best comment I read in a long time ❤️

Thank you 💜

That tracks.

It’s so much worse

Explain how.

Because training an AI is similar to training a person. You give it a bunch of examples to learn the rules from, then it applies what it learned to the prompt it is asked. The training data is not included in the end result.

How is what they’re doing different from, say, an IPTV provider?

So a while ago I was on a platform where the community valued art that was made by actual artists. AI art was strictly forbidden, and anyone who showcased AI art in their gallery or used it as a profile picture had it removed and could face a penalty.

AI has been trained to generate art styles from many artists by crawling through the web. Anyone can go to any AI generating source, punch in a few descriptive keywords, tell AI to mimic a style as closely as possible and now you have a copy of said material.

The difference is that IPTV is just an internet-based streaming station similar to how networks operate to broadcast television shows. There’s nothing to really pirate.

I’m talking about the data sets LLMs use, just so we’re on the same page.

Meta’s model was pirated in a sense; someone leaked it early last year, I think. But Llama isn’t that impressive, and after using it on WhatsApp it seems like nothing got better.

You can just run Automatic1111 locally if you want to generate images. I don’t know what the text equivalent is though, but I’m sure there’s one out there.

There’s no real need for pirate ai when better free alternatives exist.

There are plenty of open-source models, but they very much aren’t better, I’m afraid to say. Even if you have a powerful workstation GPU and can afford to run the serious 70B open-source models at low-bit quantization, you’ll still get results significantly worse than the cutting-edge cloud models, both because the most advanced models are proprietary and because they are big and would require hundreds of gigabytes of VRAM to run, which you can trivially rent from a cloud service but can’t easily get in your own PC.

The same goes for image generation - compare results from proprietary services like Midjourney to the ones you can get with local models like SD3.5. I’ve seen some clever hacks in image generation workflows - for example, using image segmentation to detect the face and hands in a generated image and then a secondary model to do a second pass over these regions to make sure they are fine. But AFAIK, these are hacks that modern proprietary models don’t need, because they have gotten over those problems and just do faces and hands correctly the first time.

This isn’t to say that running transformers locally is always a bad idea; you can get great results this way - but people saying it’s better than the nonfree ones is mostly cope.
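To put rough numbers on the VRAM point above, here is a back-of-the-envelope sketch. It only counts the weights (parameters × bits per weight ÷ 8) and ignores the KV cache, activations and runtime overhead, so real usage will be higher. The function name is made up for illustration, and 405B is used as an example size because that is the largest Llama 3.1 variant.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes).

    Assumption: every parameter is stored at the same bit width.
    Real quantization formats mix widths and add some metadata.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for size in (70, 405):
        for bits in (16, 8, 4):
            gb = weight_memory_gb(size, bits)
            print(f"{size}B at {bits}-bit: ~{gb:.0f} GB for weights")
```

By this estimate a 70B model needs about 140 GB at 16-bit and about 35 GB at 4-bit, while a 405B model at 16-bit lands around 810 GB, which is where the "hundreds of gigabytes" figure comes from.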

There are quite a few text equivalents. text-generation-webui looks and feels like Automatic1111, and supports a few backends to run the LLMs. My personal favorite is open-webui for that look and feel, and then there is Silly Tavern for RP stuff.

For generation backends I prefer ollama due to how simple it is, but there are other options.
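For anyone wondering what "simple" looks like in practice, here is a minimal sketch of talking to a local ollama server from Python using only the standard library. It assumes ollama is running on its default port (11434) and that a model has already been pulled; the model name `mistral` and the helper names are just placeholders for illustration.

```python
import json
import urllib.request

# Assumption: ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build a non-streaming generate request for a local ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "mistral") -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Example (only works with ollama running and the model pulled):
# print(generate("Explain what a .gguf file is in one sentence."))
```

Frontends like open-webui are basically doing this for you behind a nicer UI.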

Well… sufficient local processing power would enable personalized “creators” which are pre-trained to provide certain content (e.g. a game). Those thingies will definitely be pirated, hacked and modded.

As they currently already are…

Well yeah, self-instruct is how a lot of these models are trained. Bootstrap training data off a larger model and fine-tune a pre-existing model off that.
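A stripped-down sketch of that bootstrapping step, as described above: feed seed instructions to a bigger "teacher" model, keep the answers, and write them out as instruction/output pairs for fine-tuning. Everything here is hypothetical scaffolding; `fake_teacher` is a stand-in for whatever larger model you would actually query, and real pipelines also generate new instructions and filter near-duplicates and low-quality outputs.

```python
import json
from typing import Callable, Dict, Iterable, List


def build_dataset(
    seed_instructions: Iterable[str],
    teacher: Callable[[str], str],
) -> List[Dict[str, str]]:
    """Collect instruction/output pairs from a teacher model."""
    records = []
    for instruction in seed_instructions:
        output = teacher(instruction).strip()
        if not output:
            continue  # drop empty answers instead of training on them
        records.append({"instruction": instruction, "output": output})
    return records


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize records one JSON object per line, a common fine-tuning format."""
    return "\n".join(json.dumps(r) for r in records)


def fake_teacher(instruction: str) -> str:
    """Hypothetical stand-in for a call to a larger model."""
    return f"(teacher answer to: {instruction})"


if __name__ == "__main__":
    seeds = ["Summarize what open weights means.", "Explain quantization briefly."]
    print(to_jsonl(build_dataset(seeds, fake_teacher)))
```

The resulting JSONL is what you would then hand to a fine-tuning run on the smaller pre-existing model.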

It’s similar but different.

That makes no sense. Define pirated AI first.

Yeah, the whole of generative AI feels like legal piracy (that they charge for) based on how the models are trained.

Jailbreaking LLMs and diffusion models is a thing. But I wouldn’t call it piracy.

Some of the “open” models seem to have augmented their training data with OpenAI and Anthropic requests (i.e. they sometimes say they’re ChatGPT or Claude). I guess that may be considered piracy. There are a lot of customer service bots that just hook into OpenAI APIs and don’t have a lot of guardrails, so you can do stuff like ask a car dealership’s customer service to write you Python code. Actual piracy would require someone leaking the model.