Background: 15 years of experience in software and apparently spoiled because it was already set up correctly.

Been practicing doing my own servers, published a test site and 24 hours later, root was compromised.

Rolled back to the backup before I made it public and now I have a security checklist.

Sorry to hear that. Do you know how it was compromised and how you found out?

I’ve always felt that if you’re exposing SSH or any kind of management port to the internet, you can avoid a lot of issues with a VPN. I’ve always set up a VPN. It means you barely have to open anything up, and then you can open the configured web portal ports and the occasional front-end protocol where needed.

Exactly.

All of my services are ‘local’ to the VPN. Nothing happens on the LAN except for DHCP and WireGuard traffic.

Remote access is as simple as pressing the WireGuard button.

This sounds like something everyone should go through at least once, to underscore the importance of hardening that is easily taken for granted.

Basic setup for me is scripted on a new system. In regards to ssh, I make sure:

- Root account is disabled, sudo only

- ssh only by keys

- sshd blocks all users but a few, via AllowUsers

- All ‘default usernames’ are removed, like ec2-user or ubuntu for AWS ec2 systems

- The default ssh port moved if ssh has to be exposed to the Internet. No, this doesn’t make it “more secure” but damn, it reduces the script denials in my system logs, fight me.

- Services are only allowed connections by an allow list of IPs or subnets. Internal, when possible.
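The ssh items in that checklist can be turned into a quick self-audit. What follows is a minimal Python sketch, not a real tool: `parse_sshd_config` and `audit` are hypothetical helper names, the parser reads only top-level `keyword value` lines (it ignores `Match` blocks and `Include` files), and it assumes the OpenSSH defaults of port 22, `PasswordAuthentication yes`, and `PermitRootLogin prohibit-password`.

```python
def parse_sshd_config(text):
    """Return {lowercased keyword: [values]} for sshd_config-style text."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        parts = line.split(None, 1)
        key = parts[0].lower()
        value = parts[1].strip() if len(parts) > 1 else ""
        settings.setdefault(key, []).append(value)
    return settings


def audit(text):
    """Return the checklist items this config does not satisfy."""
    cfg = parse_sshd_config(text)

    def first(key, default):
        # sshd uses the first value it obtains for a keyword.
        return cfg.get(key, [default])[0].lower()

    problems = []
    if first("permitrootlogin", "prohibit-password") != "no":
        problems.append("root login is not fully disabled")
    if first("passwordauthentication", "yes") != "no":
        problems.append("password logins are still allowed")
    if "allowusers" not in cfg:
        problems.append("no AllowUsers allow list")
    if first("port", "22") == "22":
        problems.append("still on the default port 22")
    return problems


# Hypothetical hardened config, for illustration only.
SAMPLE = """\
Port 2222
PermitRootLogin no
PasswordAuthentication no
AllowUsers alice bob
"""

print(audit(SAMPLE))  # []
```

An empty config reports all four problems, which is roughly what a fresh, unhardened install looks like against this list.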

My systems are not “unhackable” but not low-hanging fruit, either. I assume everything I have out there can be hacked by someone SUPER determined, and I keep layers of protection in place to limit the fallout in case they gain full access.

> The default ssh port moved if ssh has to be exposed to the Internet. No, this doesn’t make it “more secure” but damn, it reduces the script denials in my system logs, fight me.

Gosh I get unreasonably frustrated when someone says yeah but that’s just security through obscurity. Like yeah, we all know what nmap is, a persistent threat will just look at all 65535 and figure out where ssh is listening… But if you change your threat model and talk about bots? Logs are much cleaner and moving ports gets rid of a lot of traffic. Obviously so does enabling keys only.
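To put a number on the "cleaner logs" point, you can count failed logins per source address before and after moving the port. This is a rough Python sketch; `failed_attempts` is a hypothetical name, the regex assumes typical OpenSSH messages ("Failed password ... from ADDR", "Invalid user ... from ADDR"), and the sample lines are made up with documentation-range addresses.

```python
import re
from collections import Counter

# Captures the source address in common OpenSSH failure messages (assumed format).
FAILED = re.compile(r"(?:Failed password|Invalid user) .* from (\S+)")


def failed_attempts(log_lines):
    """Count failed SSH login attempts per source address."""
    hits = Counter()
    for line in log_lines:
        match = FAILED.search(line)
        if match:
            hits[match.group(1)] += 1
    return hits


# Made-up sample log lines, for illustration only.
SAMPLE_LOG = [
    "Jan 10 03:14:07 host sshd[1234]: Failed password for root from 203.0.113.5 port 51234 ssh2",
    "Jan 10 03:14:09 host sshd[1234]: Invalid user admin from 203.0.113.5 port 51240",
    "Jan 10 03:15:00 host sshd[1250]: Failed password for invalid user test from 198.51.100.7 port 40000 ssh2",
    "Jan 10 03:16:00 host sshd[1300]: Accepted publickey for alice from 192.0.2.10 port 50000 ssh2",
]

for addr, count in failed_attempts(SAMPLE_LOG).most_common():
    print(addr, count)
```

Run against a real auth log, the same counter is also the starting point for an autoban rule: anything above a threshold gets blocked.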

Also does anyone still port knock these days?

I use port knock. Really helps against scans if you are the edge device.
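For anyone who hasn't seen it, the server-side logic of port knocking is a small state machine per source address. A minimal Python sketch of just that logic, with no packet capture or firewall calls: `KnockTracker`, the port numbers, and the 10-second window are all hypothetical choices for illustration.

```python
import time


class KnockTracker:
    """Track each source address's progress through a secret port sequence."""

    def __init__(self, sequence, window=10.0):
        self.sequence = list(sequence)
        self.window = window   # seconds allowed to finish the whole sequence
        self.progress = {}     # addr -> (next index, time of first knock)

    def knock(self, addr, port, now=None):
        """Record one knock; return True when addr completes the sequence."""
        now = time.monotonic() if now is None else now
        index, started = self.progress.get(addr, (0, now))
        if now - started > self.window:
            index, started = 0, now  # too slow: start over
        if port != self.sequence[index]:
            # A wrong port resets progress, unless it is a fresh first knock.
            if port == self.sequence[0]:
                self.progress[addr] = (1, now)
            else:
                self.progress.pop(addr, None)
            return False
        index += 1
        if index == len(self.sequence):
            self.progress.pop(addr, None)
            return True  # a real daemon would open the ssh port for addr here
        self.progress[addr] = (index, started)
        return False


tracker = KnockTracker([7000, 8000, 9000])
print(tracker.knock("203.0.113.5", 7000, now=0.0))  # False
print(tracker.knock("203.0.113.5", 8000, now=1.0))  # False
print(tracker.knock("203.0.113.5", 9000, now=2.0))  # True
```

Because any wrong port resets the sequence, a scanner walking ports in order never completes it, which is why it helps against scans.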

> Also does anyone still port knock these days?

If they did, would we know?

Literally the only time I got somewhat hacked was when I left a service on its default port. Obscurity is reasonable; combined with other measures like the ones mentioned here, it makes you pretty much invulnerable to casuals. Somebody needs to target you to get anything.

> Also does anyone still port knock these days?

Enter Masscan, probably a net negative for the internet, so use with care.

I didn’t see anything about port knocking there, it rather looks like it has the opposite focus - a quote from that page is “features that support widespread scanning of many machines are supported, while in-depth scanning of single machines aren’t.”

Sure yeah it’s a discovery tool OOTB, but I’ve used it to perform specific packet sequences as well.

Lol you can actually demo a github compromise in real time to an audience.

Make a repo with an API key, publish it, and literally just watch as it takes only a few minutes before a script logs in.

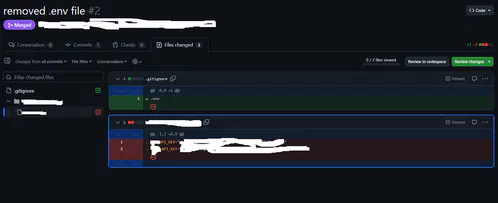

I search commits for “removed env file” to hopefully catch people who don’t know how git works.

You gremlin lmao

--verbose please?

edit: never mind, found it. So there’s dumbasses storing sensitive data (keys!) inside their git folder and unable to configure .gitignore…
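The bots doing this are basically pattern matching on pushed text, and you can run the same kind of check on your own files before committing. A minimal Python sketch under stated assumptions: `find_secrets` and the three rules are illustrative and nowhere near a complete rule set, and the AWS key in the sample is the well-known documentation placeholder, not a real credential.

```python
import re

# Illustrative rules only; real scanners ship far larger rule sets.
PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "generic assignment": re.compile(
        r"\b(?:api_?key|secret|token|password)\b\s*[:=]\s*['\"]?[^\s'\"]{8,}",
        re.IGNORECASE,
    ),
}


def find_secrets(text):
    """Return (line number, rule name) pairs for lines that look like secrets."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((number, name))
    return findings


# Made-up .env content, for illustration only.
SAMPLE_ENV = """\
DEBUG=true
API_KEY=abcd1234efgh5678
aws_id = AKIAIOSFODNN7EXAMPLE
"""

for number, rule in find_secrets(SAMPLE_ENV):
    print(f"line {number}: {rule}")
```

Deleting the file in a later commit does not help, since the key is still in history; that is exactly what the "removed env file" search exploits.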

My work is transferring to github from svn currently

My condolences

yeah, I just tried it there, people actually did it.

> yeah, I just tried it there, people actually did it.

I always start with .gitignore and adding the .env then making it.

Anywho, there’s git filter-repo which is quite nice and retconned some of my repos for some minor things out of existence :P

I searched for “added gitignore” and I found an Ethereum wallet with 25 cents.

Good on you learning new skills.

This is why other sysadmins and cybersecurity exist. Be nice to them.

I usually just follow this

Had this years ago except it was a dumbass contractor where I worked who left a Windows server with FTP services exposed to the Internet and IIRC anonymous FTP enabled, on a Friday.

When I came in on Monday it had become a repository for warez, malware, and questionable porn. We wiped it rather than trying to recover anything.

questionable?

Yeah just like that. Ask more questions

Do not allow username/password login for ssh. Force certificate authentication only!

> Do not allow username/password login for ssh

This is disabled by default for the root user.

    $ man sshd_config
    ...
    PermitRootLogin
            Specifies whether root can log in using ssh(1). The argument must be yes, prohibit-password, forced-commands-only, or no. The default is prohibit-password.
    ...

If it’s public facing, how about you don’t turn on ssh to the public and only open it to select IPs or ranges? Use a non-standard port, use a cert or even RADIUS with TOTP like privacyIDEA. How about a port knocker to open the non-standard port as well? Autoban to lock out source IPs.

That’s just off the top of my head.

There’s a lot you can do to harden a host.

> don’t turn on ssh to the public, open it to select IPs or ranges

What if you don’t have a static IP, do you ask your ISP in what range their public addresses fall?

Sure. My ISP gave me this range for this exact reason.
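Checking a source address against a handful of ranges is a one-liner with Python's standard `ipaddress` module. A small sketch of the idea, assuming documentation-range addresses: `build_allow_list`, `is_allowed`, and the two CIDR blocks are hypothetical stand-ins for whatever range your ISP hands you, and real enforcement belongs in the firewall, not in application code.

```python
import ipaddress


def build_allow_list(cidrs):
    """Parse CIDR strings into network objects once, up front."""
    return [ipaddress.ip_network(cidr) for cidr in cidrs]


def is_allowed(addr, allow_list):
    """Return True if addr falls inside any allowed network."""
    ip = ipaddress.ip_address(addr)
    return any(ip in network for network in allow_list)


# Hypothetical ranges: an ISP-assigned block plus a VPN subnet.
ALLOWED = build_allow_list(["203.0.113.0/24", "10.8.0.0/24"])

print(is_allowed("203.0.113.77", ALLOWED))  # True
print(is_allowed("198.51.100.9", ALLOWED))  # False
```

A whole ISP range is obviously a wider door than a single static IP, but it still cuts out almost all of the internet.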

Why though? If u have a strong password, it will take eternity to brute force
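The arithmetic behind that claim, as a rough Python sketch: `brute_force_years` and `entropy_bits` are hypothetical helpers, the guess rate of 10 per second is an assumed figure for online ssh guessing, and the whole calculation only holds for passwords chosen uniformly at random.

```python
import math


def entropy_bits(length, alphabet_size):
    """Bits of entropy in a uniformly random password."""
    return length * math.log2(alphabet_size)


def brute_force_years(length, alphabet_size, guesses_per_second):
    """Average years to find a random password by exhaustive search."""
    keyspace = alphabet_size ** length
    # On average the search succeeds after trying half the keyspace.
    seconds = keyspace / 2 / guesses_per_second
    return seconds / (365.25 * 24 * 3600)


# Assumed scenario: 12 random characters from a-z, A-Z, 0-9 at 10 guesses/s.
print(f"{entropy_bits(12, 62):.1f} bits")
print(f"{brute_force_years(12, 62, 10):.2e} years")
```

So a truly random password does hold up against online guessing. The catch is that bots try word lists, not the full keyspace, so a guessable password falls on the first few attempts no matter how long it is, and keys-only logins remove the question entirely.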

Use gnome powder to shrink, go behind the counter, kick his ass and get your money back.

This is like browsing /c/selfhosted as everyone portforwards every experimental piece of garbage across their router…

> portforwards every experimental piece of garbage across their router…

Man some of those “It’s so E-Z bro” YouTubers are WAY too cavalier about doing this.

hey, that’s me!

Meh. Each service in its own isolated VM and subnet. Plus just generally a good firewall setup. Currently hosting ~10 services publicly, never had any issue.

Well, if you actually do that, bully for you, that’s how that should be done if you have to expose services.

Everyone else there is probably DMZing their desktop from what I can tell.

Yeah the only thing forwarded past my router is my VPN. Assuming I did my job decently, without a valid private key it should be pretty difficult to compromise.

One time, I didn’t realize I had allowed all users to log in via ssh, and I had a user “steam” whose password was just “steam”.

“Hey, why is this Valheim server running like shit?”

“Wtf is xrx?”

“Oh, it looks like it’s mining crypto. Cool. Welp, gotta nuke this whole box now.”

So anyway, now I use NixOS.

Good point about a default deny approach to users and ssh, so random services don’t add insecure logins.

Weird. My last setup had a NAT with a few VMs hosting a few different services. For example, Jellyfin, a web server, and novnc/vm. That turned out perfectly fine and it was exposed to the web. You must have had a vulnerable version of whatever web host you were using, or maybe if you had SSH open without rate limits.

I’m having the opposite problem right now. Tightened a VM down so hard that now I can’t get into it.

I don’t think I’m ever opening up anything to the internet. It’s scary out there.

I don’t trust my competence, and if I did, I don’t trust my attention to detail. That’s why I outsource my security: pihole+firebog for links, ISP for my firewall, and Tailscale for tunnels. I’m not claiming any of them are the best, but they’re all better than me.

ISP for firewalls might not be better than you. Get something dedicated.

Ubiquiti or pfSense is a good start.

You overestimate my competence. I do intend to leave my ISP firewall up and intact, but I could build layers behind it.

I run everything on a mini PC (Beelink EQ12), which I intend to age into a network box (router, DNS, firewall) when I outgrow it as a server. It’ll be a couple of years and a few more users yet though.