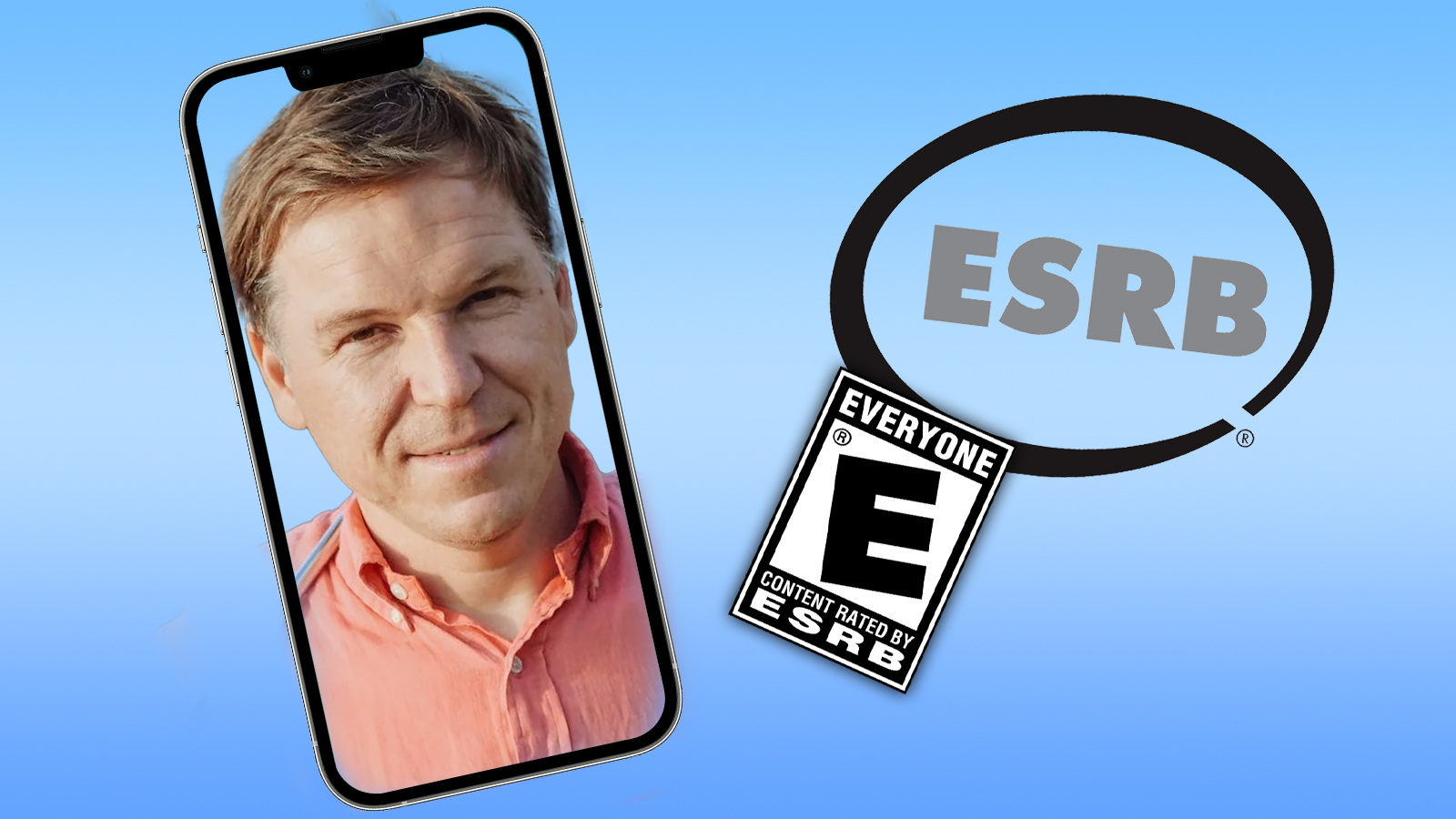

The ESRB has added:

“To be perfectly clear: Any images and data used for this process are never stored, used for AI training, used for marketing, or shared with anyone; the only piece of information that is communicated to the company requesting VPC is a “Yes” or “No” determination as to whether the person is over the age of 25.”

Sure, ok…

I don’t know what else to say about this; it will obviously turn into something else.

From the description, it sounds like you upload a picture, then show a face to a video camera. It’s not like they’re going through Face ID, which has anti-spoofing hardware and software. If they’re supporting normal webcams, they can’t check for things like 3D markers.

Based on applications that have rolled out for use cases like police identifying suspects, I would hazard a guess that

I’m betting this will turn out to be a massive waste of resources, but that has never stopped something from being adopted. Several municipalities even had to ban the cops from using facial recognition, because they liked being able to identify and catch suspects, even when it was likely to be the wrong person. In one scenario I read about, researchers had to demonstrate that the software the PD was using identified several prominent local politicians as robbery and murder suspects.