- 377 Posts

- 307 Comments

3·1 day ago

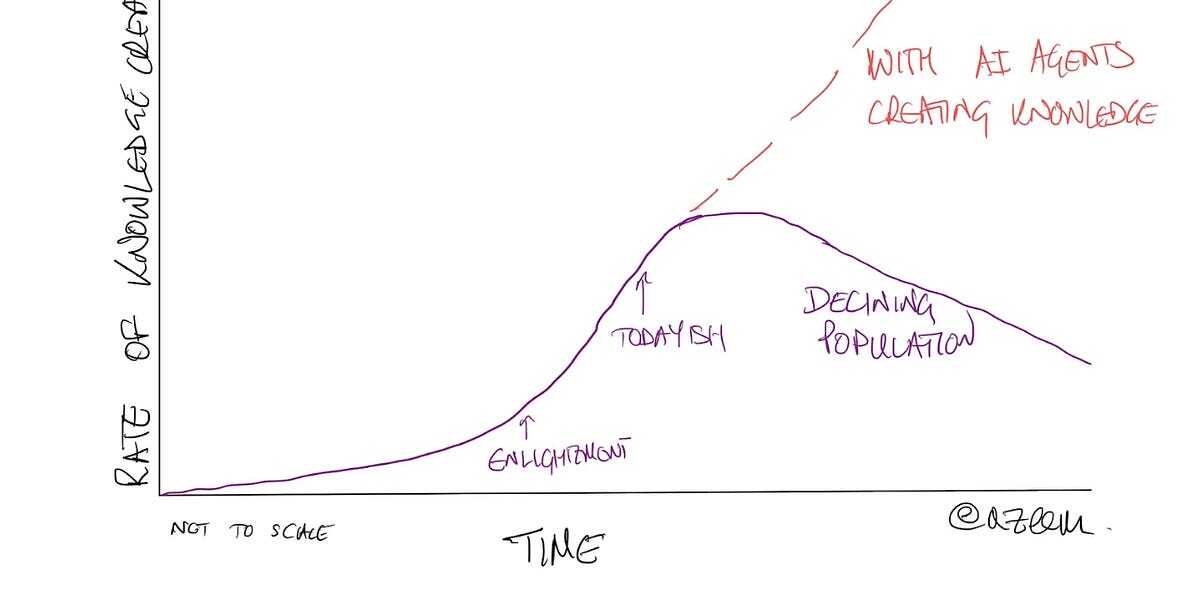

If you are familiar with the idea of AI taking off into the realms of superintelligence, one of the steps that is supposed to accompany that is recursive self-improvement. In other words, AI writes its own code to improve itself, and thus continuously gets more and more powerful. I wonder if examples like this are the tiny first baby steps of that.

2·1 day ago

I’m surprised drone deliveries haven’t taken off more yet. These guys in Germany look like they are onto a winning solution too.

https://www.siliconrepublic.com/machines/wingcopter-drone-delivery-groceries-germany

78·1 day ago

Microsoft has cash reserves of $75 billion.

Microsoft - If you really want to convince us that nuclear power is part of the future, why can’t you use some of your own money? Why does every single nuclear suggestion always rely on bailouts from taxpayers? Here’s a thought: if you can’t pay for it yourself, just pick the cheaper option that taxpayers don’t have to pay for. You know, renewables and grid storage? The stuff that everybody else, all over the world, is using to build nearly 99% of new electricity generation.

5·1 day ago

“INBRAIN’s BCI technology was able to differentiate between healthy and cancerous brain tissue with micrometer-scale precision.”

This breakthrough with the surgery is very interesting, but what is even more interesting to me is their wider goals. They wonder if it will be possible to use this approach to treat many brain disorders, including mental health conditions.

This also has the potential for a direct connection between artificial intelligence and our brains. That has long been speculated about in sci-fi. This approach has a chance of starting to make it a reality.

1·1 day ago

When I look at the potential in current advances in medicine, and the idiocracy that passes for “politics” and “debate” in some quarters, I wonder when more people are going to wise up.

Training and educating surgeons is the biggest bottleneck in the availability of their skills, and thus in the number of surgeries people can have. Here we have the potential to smash through that. Procedure by procedure, as robots master individual types of surgery, the only bottleneck left is the number of robots. A vastly easier and quicker problem to solve than increasing the supply of trained human surgeons.

2·2 days ago

For sure there is a certain amount of hype here. That said, much of their thinking seems like it could be sound. But I want to see stuff like this working in practice, not just theoretically. I guess we will have to wait and see.

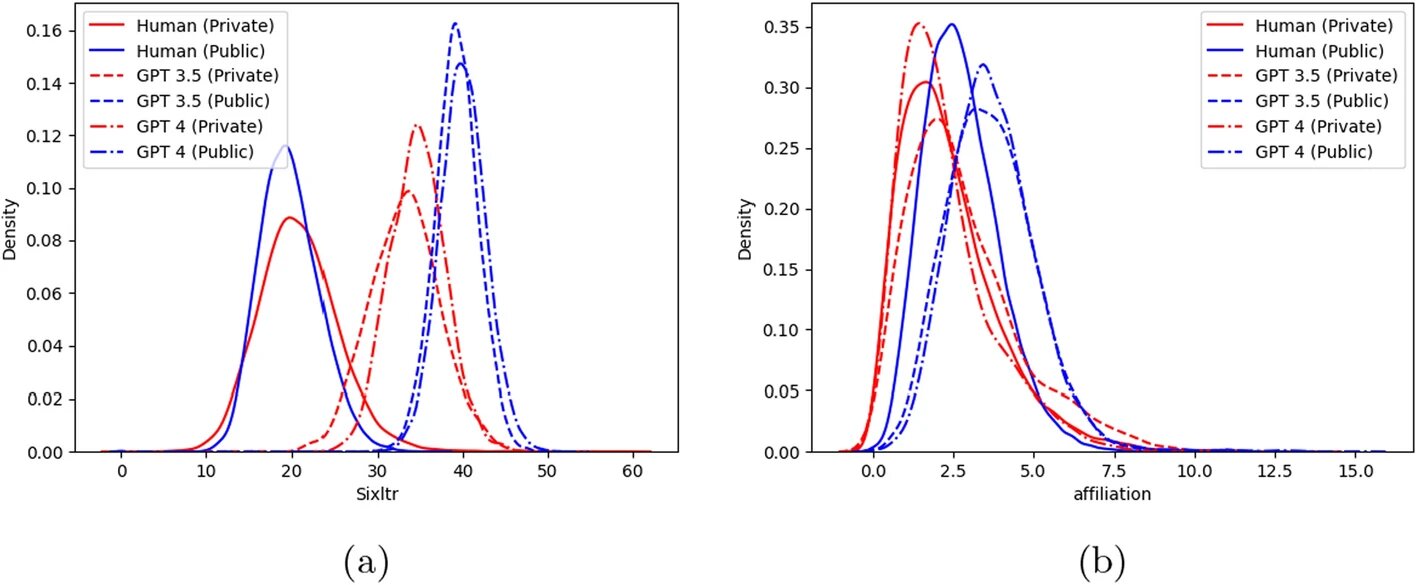

171·2 days ago

Dear ChatGPT - Please pretend I’m a rich, white man when you’re writing my college admissions essay for me, thanks, Signed - A Poor

11·2 days ago

This engine seems to work like an ion thruster but uses metal as fuel instead of xenon gas. Metal is easier to refine and can be found in asteroids all over the Solar System.

4·3 days ago

On the galactic scale it’s the second-nearest star to us out of billions.

3·3 days ago

It’s fascinating we are living through a time when exoplanets are first being found. This planet is closer to its red dwarf star than Mercury is to our far, far hotter G-type star. Still, the surface temperature is only 25 degrees Celsius above boiling point.

3·3 days ago

Pop-up mobile parks can do the same. I love this example from London.

2·4 days ago

In most countries, people elect the local officials who make zoning decisions. It’s not a fundamental barrier.

3·4 days ago

They quote a cost of $1,000 per square meter ($100 per sq foot). So I arrived at my calculation assuming a size of 100 sq m/1,000 sq feet for an average ‘starter home’ 2-bedroom dwelling.
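A rough check of that arithmetic, as a minimal sketch: the only inputs are the quoted $1,000 per square meter and the assumed 100 sq m home size, and the variable names are purely illustrative. The conversion factor shows that the per-square-foot and 1,000 sq feet figures are rounded approximations.

```python
# Rough check of the quoted figures. The 100 sq m home size is an
# assumption taken from the comment, not a measured average.
COST_PER_SQ_M = 1_000       # USD per square meter, as quoted
HOME_SIZE_SQ_M = 100        # assumed 'starter home' size in square meters
SQ_FT_PER_SQ_M = 10.7639    # standard conversion: square feet in one square meter

# Total build cost for the assumed home size
total_cost = COST_PER_SQ_M * HOME_SIZE_SQ_M

# The same figures expressed in square feet
cost_per_sq_ft = COST_PER_SQ_M / SQ_FT_PER_SQ_M
home_size_sq_ft = HOME_SIZE_SQ_M * SQ_FT_PER_SQ_M

print(f"Total cost: ${total_cost:,}")
print(f"Cost per sq ft: ${cost_per_sq_ft:.2f}")
print(f"Home size: {home_size_sq_ft:.0f} sq ft")
```

Under those assumptions the total comes to $100,000, with the cost per square foot a little under $100 and the floor area a little over 1,000 sq feet.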

The fact that housing crises are occurring in so many Western countries suggests to me that there is something very fundamental that is broken and wrong with our system of supplying housing - one of life’s most basic human necessities.

If the system is the problem, then the system can’t provide the solution. Perhaps only radical new ways of doing things can?

Germans have a system of purchasing property called “Wohnungsgenossenschaften”, where individuals come together in a not-for-profit cooperative to build and finance their own apartment buildings and housing complexes. This technology seems a perfect fit for that. Maybe we would all be better off in other Western countries if we adopted this system more?

1·4 days ago

Yes, sadly he was quite prescient. I often think we’re in a time of transition/decay because of tech like AI & robotics. Sadly, those are perfect conditions for fascism, which the right has so often transmuted into throughout history.

9·6 days ago

Some people may have doubts about these claims, but China leads the world in manufacturing. They’ve also blasted past all expectations when it comes to developing batteries, renewables and EVs. I wouldn’t bet against them.

43·6 days ago

I wonder if we will look back at stuff like this as the very beginnings of recursive self-improvement by artificial intelligence.

5·7 days ago

Could be, but isn’t, which is where some regulations probably need to come in.

I assume also that the technological side of things is far from perfected, but that will improve over time.

211·8 days ago

There’s no reason it couldn’t be a closed system, where any fertilizer that doesn’t become part of the crop biomass is recycled. In theory it should be more sustainable than existing agriculture and use less fertilizer per kg of crop produced.

5·11 days ago

Active euthanasia is legal in a few countries for terminally ill patients.

That doesn’t seem an accurate description of the situation. Yes, doctors and nurses sometimes ‘help people along’ in their final hours or even days, but that is not the same thing as the euthanasia being described here.

In Europe and North America food loss adds up to around 16%

Everywhere in the world hunger and malnutrition are distribution problems, not lack of production problems.