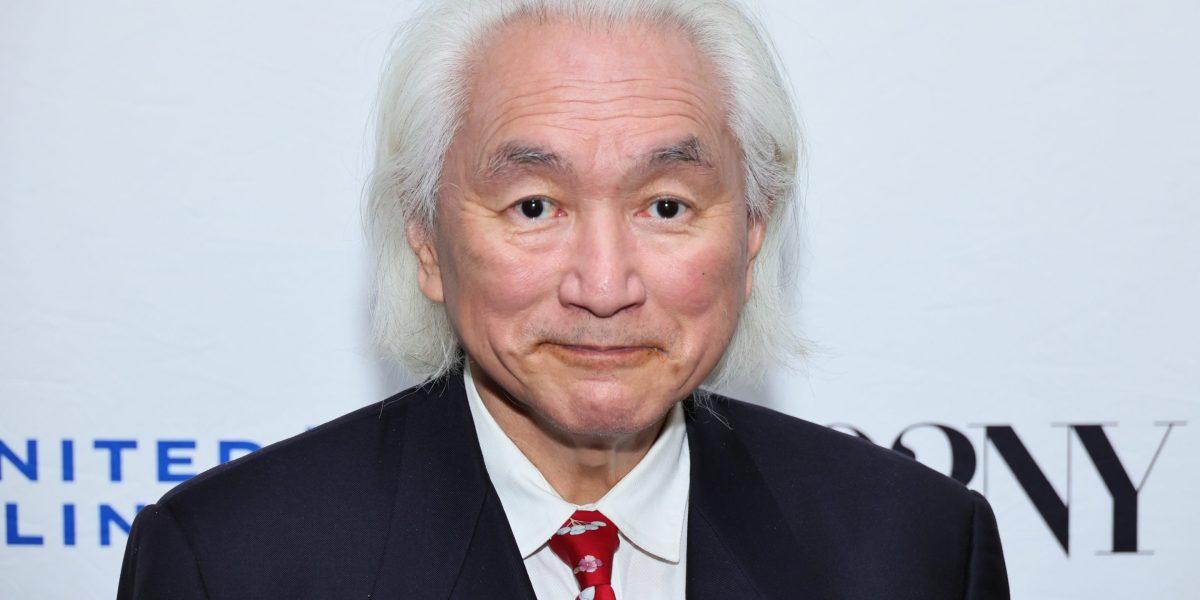

Top physicist says chatbots are just ‘glorified tape recorders’

Leading theoretical physicist Michio Kaku predicts quantum computers are far more important for solving mankind’s problems.

A physicist is not gonna know a lot more about language models than your average college grad.

That’s absolute nonsense. Physicists have to be excellent statisticians and, unlike data scientists, statisticians have to understand where the data is coming from, not just how to spit out simple summaries of enormously complex datasets as if it had any meaning without context.

And his views are exactly in line with pretty much every expert who doesn’t have a financial stake in hyping the high tech magic 8-ball. On the Dangers of Stochastic Parrots.

I had that paper in mind when I said that. Doesn’t exhibit a very thorough understanding of how these models actually work.

A common argument is that the human brain may well work exactly the same way, hence the common phrase, “I’m a stochastic parrot and so are you.”

That’s a Sam Altman line and all it shows is that he does not know how knowledge is acquired, developed, or applied. He has no concept of how the world actually works and has likely never thought deeply about anything in his life beyond how to grift profitably. And he can’t afford to examine his (professed) beliefs because he’s trying to cash out on a doomed fantasy before too many people realise it is doomed.

Okay but LLMs have multiplied my productivity far more than any tape recorder ever could or ever will. The statement is absolute nonsense.

Do you imagine that music did not exist before we had the means to record it? Or that it had no effect on the productivity of musicians?

Vinyl happened before tape but in the early days of computers, tape was what we used to save data and code. Kids TV programmes used to play computer tapes for you to record at home, distributing the code in an incredibly efficient way.

> Kids TV programmes used to play computer tapes for you to record at home, distributing the code in an incredibly efficient way.

Could you expand on this? Sounds interesting.

They just played the tapes on TV: kinda screechy, computer-y sounds. They’d tell you when to press record on your cassette player before they started. You’d hold it close to the TV speakers until it finished playing, then plug the cassette player into your computer, and there’d be some simple free game to play. I didn’t believe it would work, but it did. I still don’t believe it worked. But it did.

There must be a clip somewhere on the internet but my search skills are nowhere near good enough to find one.

[New comment instead of editing the old so that you see it]

I managed to find a video of an old skool game loading. That’s what it sounded like when you loaded a program and it’s exactly what they’d play on the TV so you could create your tape.

Thank you very much for the effort! I also searched for text or video, but found none.

I understand now what you previously meant, streaming code via TV.

> That’s what it sounded like when you loaded a program and it’s exactly what they’d play on the TV so you could create your tape.

Now I have a new confusion: Why would they let the speaker play the bits being processed? It surely was technically possible to load a program into memory without sending anything to the speaker. Or wasn’t it, and it was a technical necessity? Or was it an artistic choice?

I assume it was because they used ordinary tape recorders, that people would otherwise use as dictaphones or to play music. I guess there wasn’t a way to transfer the data silently because the technology was designed to play sound? We had to wait for the floppy disk for silent-ish loading. Ish because they click-clacked a lot, but that was moving parts rather than the code itself.
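The sound was the data itself: cassette interfaces stored bits as audio tones, which is exactly what an ordinary tape recorder can capture. As a rough illustration, here is a minimal Python sketch of one well-known scheme, the Kansas City standard (assumed here; the home computers in the TV anecdote may well have used different encodings and speeds). Each byte is framed with a start bit and two stop bits, 0s become 1200 Hz and 1s become 2400 Hz, at 300 baud. The sample rate and the `write_wav` helper are illustrative choices, not part of any particular machine's format.

```python
import math
import struct
import wave

SAMPLE_RATE = 9600  # samples per second (assumed); gives a whole number of samples per bit
BAUD = 300          # bits per second in the Kansas City standard
FREQ_ZERO = 1200    # Hz: a 0 bit is 4 cycles of 1200 Hz at 300 baud
FREQ_ONE = 2400     # Hz: a 1 bit is 8 cycles of 2400 Hz at 300 baud


def byte_to_bits(value):
    """Frame one byte: a 0 start bit, 8 data bits (LSB first), then two 1 stop bits."""
    data_bits = [(value >> i) & 1 for i in range(8)]
    return [0] + data_bits + [1, 1]


def bits_to_samples(bits):
    """Render each bit as a burst of sine wave at that bit's tone frequency."""
    samples_per_bit = SAMPLE_RATE // BAUD
    samples = []
    for bit in bits:
        freq = FREQ_ONE if bit else FREQ_ZERO
        # Every bit holds a whole number of cycles, so restarting n at 0 keeps the wave continuous.
        for n in range(samples_per_bit):
            samples.append(math.sin(2 * math.pi * freq * n / SAMPLE_RATE))
    return samples


def encode(data):
    """Turn bytes into a list of audio samples in the range [-1.0, 1.0]."""
    samples = []
    for byte in data:
        samples.extend(bits_to_samples(byte_to_bits(byte)))
    return samples


def write_wav(path, samples):
    """Write mono 16-bit PCM so the result can be played (or recorded onto tape)."""
    frames = b"".join(struct.pack("<h", int(s * 0.8 * 32767)) for s in samples)
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(frames)
```

Something like `write_wav("hello.wav", encode(b"HELLO"))` produces the same kind of screech. At 300 baud each framed byte takes 11 bit-times, roughly 37 ms, which is why loading even a small game took minutes.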

Your statement and the original one can both be in sync with one another.

Microsoft Word is just a glorified notepad but it still improves my productivity significantly.

And everyone will have different uses depending on their needs. ChatGPT, for example, has done nothing for my productivity; it usually adds work, because I have to double-check all the nonsensical crap it gives me and then correct it.

Those are all gross oversimplifications. By the same logic the internet is just a glorified telephone, the computer is a glorified abacus, the telephone is just a glorified messenger pigeon. There are lots of people who don’t understand LLMs and exaggerate their capabilities, but dismissing them is also bad.

I think describing word processors as glorified notepads would also be extremely misleading, to the extent that I would describe that statement as incorrect.

Nope. Biologists also use statistical models and also know where the data is coming from etc etc. They are not experts in AI. This Michio Kaku guy is more like the African American Science Guy to me, more concerned with being a celeb.

Biologists are (often) excellent statisticians too, you’re correct. That’s why the most successful quants are biologists or physicists, despite not having trained in finance.

They’re not experts in (the badly misnamed) AI. They’re experts in the statistical models AI uses. They know an awful lot more than the likes of Sam Altman and the AI-hypers. Because they’re trained specialists, not techbro grifters.

I disagree: physics is the foundational science of all sciences. It is the science with the strongest emphasis on understanding math well enough to derive the equations that actually take form in the real world.

Therefore, if you know physics, you know everything.

Yes. Glorified tape recorders that can provide assistance and instruction in certain domains that is very useful beyond what a simple tape recorder could ever provide.

I think a good analogue is the invention of the typewriter or the digital calculator. It’s not like it’s something that hadn’t been conceived of or that we didn’t have correlatives for. Is it revolutionary? Yes, the world will change (has changed) because of it. But the really big deal is that this puts up a big, bright signpost of how things will go far off into the future. The typewriter led to the digital typewriter. The digital typewriter showed the demand for personal business machines like the first Apples.

It’s not just about where we’re at (and to be clear, I am firmly in the ‘this changed the world’ camp. I realize not everyone holds that view, but as a daily user/builder, it’s my strong opinion that the world changed with the release of ChatGPT, even if you can’t tell yet); the broader point is about where we’re going.

The dismissiveness I’ve seen around this tech is, frankly, hilarious. I get that it’s sporting to be a curmudgeon, but dismissing this technology means completely missing what will be one of the most influential technologies humans have ever invented. Is this general intelligence? To keep pretending it has to be AGI or nothing is to miss the entire damn point. And this goalpost shifting is how the frog gets slowly boiled.

I reckon it’s somewhere in between. I really don’t think it’s going to be the revolution they pitched, or some feared. It’s also not going to be completely dismissed.

I was very excited when I started to play with various AI tools, then about two weeks in I realized how limited they are and how much human input and editing they need to produce good output. There’s a ton of hype, and it’s had little impact on the regular person’s life.

Biggest application of AI I’ve seen to date? Making presidents talk about weed, etc.

> I reckon it’s somewhere in between. I really don’t think it’s going to be the revolution they pitched, or some feared. It’s also not going to be completely dismissed.

Do you use it regularly or develop ML/AI applications?

Yes. I wrote my master’s thesis in engineering on ML/AI (before ChatGPT and YOLO became popular and viable), and I’m currently working on multi-object detection and tracking using ML/AI.

It’s not gonna be like the invention of the modern computer, but it’s probably gonna reach about the same level as Google, or the electronic typing machine.

I use some image generation tools and LLMs.

I think it’s a safe bet to estimate it will work out to be somewhere in the middle of the two extremes. I don’t think AI/ML is going to be worthless, but I also don’t think it’s going to do all these terrible things people are afraid of. It will find its applications and be another tool we have.

I find it fascinating how different sections of society see the tech totally differently; a lot of people seem to think that because it can’t do everything, it can do nothing. I’ve been fascinated by AI for decades, so having finally cracked language comprehension feels like huge news, because it opens so many other doors, especially in human usability of new tools.

We’re going to see a huge shift in how we use technology, I don’t think it will be long before we’re used to telling the computer what we want it to do - organising pictures, sorting inventory in a game, finding products in shops… Being able to actually tell it ‘i want a plug for my bath’ and not being offered electrical plugs, even being told ‘there are three main types as seen here, you will need to know the size of your plug hole to ensure the correct fit’

As the technology matures, we’ll see it get increasingly reliable for things like legal and medical knowledge; even if it’s just referring people to doctors, it could save a huge number of lives.

It’s absolutely going to have as much effect on our lives as the internet’s development did, but I think a lot of people forget how significant that really was.

I agree. I also think we’ll eventually be able to send a request like: “Here is an example of a Bluetooth driver for Linux. It isn’t working any more because of a kernel update (available here). Please update the driver and test it. You have access to the port and there is a Bluetooth device available for connection. Please develop a solution, test it, write a unit test, and make commits along the way (with comments, please). Also, if you have any issues, email me at [email protected], and I’ll hop back online to help you. Otherwise, keep working until you are finished and have a working driver.”

Are we there yet? No, I’ve tried some of the recursive implementations and I’m yet to have them generate something completely functional. But there is a clear path from the current technology to that implementation. It’s inevitable.

Well it’s like a super tape recorder that can play back anything anyone has ever said on the internet.

But altered almost imperceptibly such that it’s entirely incorrect.

Yeah, a “tape recorder” that adapts to what you ask… If there was a tape recorder before where I could put in the docs I’ve written and get recommendations on how to improve my style and organization, I missed it.

Remember the time Michio Kaku went on CBS to talk about Hurricane Harvey despite not being an expert on hurricanes? He is a clout chaser who regularly steps outside his expertise to make hot takes for attention.

I also recommend this video that touches on Michio Kaku talking about things outside his expertise.

He’s not even a top physicist, just well known.

I wouldn’t call this guy a top physicist… I mean he can say what he wants but you shouldn’t be listening to him. I also love that he immediately starts shilling his quantum computer book right after his statements about AI. And mind you that this guy has some real garbage takes when it comes to quantum computers. Here is a fun review if you are interested https://scottaaronson.blog/?p=7321.

The bottom line is: you shouldn’t trust this guy on anything he says, except maybe string theory, which is actually his specialty. I wish news outlets would stop asking this guy on; he is such a fucking grifter.

> I wouldn’t call this guy a top physicist… I mean he can say what he wants but you shouldn’t be listening to him.

Yeah, I don’t see how he has any time to be a “top physicist” when he seems to spend all his time as a commentator on TV shows that are only tangentially related to his field. On top of that, LLMs aren’t even tangentially related.

> Leading theoretical physicist Michio Kaku

I wouldn’t listen too closely to discount Neil deGrasse Tyson these days, especially in domains in which he has no qualifications whatsoever.

Just set your expectations right, and chatbots are great. They aren’t intelligent. They’re pretty dumb. But they can say stuff about a huge variety of domains.

I understand that he’s placing these relative to quantum computing, and that he is a scientist deeply invested in that realm. It just seems too reductionist from a software perspective: ultimately, yes, we are limited by the architecture of our physical computing paradigm, but that doesn’t discount the incredible advancements we’ve made in the space.

Maybe I’m being too hyperbolic over this small article, but does this mean any advancement in CS research is just a glorified (insert elementary mechanical thing here) because it uses bits and the von Neumann architecture?

I used to adore Kaku when I was young, but as I got into academia and saw how attached he stayed to string theory long after its expiry date, and how popular he got on pretty wild, speculative fiction, I struggle to take him too seriously in this realm.

In my experience, which comes from years in labs working on creative computation, AI, and NLP, these large language models are impressive and revolutionary, but, quite frankly, for dumb reasons. The transformer was a great advancement, but seemingly only if we piled obscene amounts of data on it, previously unimagined amounts. Now we can train smaller bots off of the data from these bigger ones, which is neat, but it’s still that mass of data.
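Training a small model off a big one is usually called distillation. Approaches like Orca fine-tune the small model on text the large model generates; the minimal Python sketch below swaps in the older soft-label variant instead, because it fits in a few lines: the student is pushed to match the teacher's temperature-softened output distribution, measured with KL divergence. The logits and temperature are made-up illustrative values, not anything from a real model.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; terms where p is 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """Soft-label distillation: how far the student's softened predictions are from the teacher's."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # The T**2 factor is the usual rescaling that keeps gradient size comparable across temperatures.
    return temperature ** 2 * kl_divergence(p_teacher, p_student)


teacher = [4.0, 1.0, 0.5]  # hypothetical logits from the large model
student = [2.0, 1.5, 0.2]  # hypothetical logits from the small model
print(distillation_loss(teacher, student))
```

The temperature is the design knob here: softening both distributions exposes how the teacher ranks the "wrong" answers, which carries more information per example than a single hard label.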

To the general public: yes, LLMs are overblown. To someone who spent years researching creativity-assistance AI and NLP: these are freaking awesome, and I’m amazed at the capabilities we now have for creating code that can do qualitative analysis and natural-language interfacing, but the model is unsustainable unless techniques like Orca come along and shrink the data requirements. That said, I’m running pretty competent language and image models on a relatively cheap 12GB consumer video card, so we’re progressing fast.
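Whether a model fits on a 12GB card is mostly back-of-the-envelope arithmetic: parameter count times bits per parameter. A minimal Python sketch follows. The 7B and 13B sizes, the bit widths, and the 1.5 GB overhead allowance for activations and the KV cache are illustrative assumptions, not figures from this thread; real usage depends heavily on the runtime and context length.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Approximate memory for the model weights alone, in decimal gigabytes."""
    return n_params * bits_per_param / 8 / 1e9


def fits(n_params, bits_per_param, vram_gb=12.0, overhead_gb=1.5):
    """Rough check: weights plus an assumed fixed overhead must fit in VRAM."""
    return weight_memory_gb(n_params, bits_per_param) + overhead_gb <= vram_gb


if __name__ == "__main__":
    for params in (7e9, 13e9):  # hypothetical model sizes
        for bits in (16, 8, 4):  # fp16, 8-bit and 4-bit quantization
            gb = weight_memory_gb(params, bits)
            print(f"{params / 1e9:.0f}B @ {bits}-bit: {gb:.1f} GB, fits in 12 GB: {fits(params, bits)}")
```

Under these assumptions a 7B model at 16-bit needs about 14 GB for weights alone, while 4-bit quantization brings even a 13B model down to about 6.5 GB, which is a large part of why competent models run on consumer cards.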

Edit to add: And I do agree that we’re going to see wild stuff with quantum computing one day, but that doesn’t discount the excellent research being done by folks working with existing hardware, and it’s upsetting to hear a scientist balk at a field like that. And I recognize I led this by speaking down on string theory, but string theory pop science (including Dr. Kaku’s) wreaked havoc on people taking physics seriously.

He is trying to sell his book on quantum computers which is probably why he brought it up in the first place

Oh for sure. And it’s a great realm to research, but pretty dirty to rip apart another field to bolster your own. Then again, string theorist…

My opinion is that a good indication that LLMs are groundbreaking is that it takes considerable research to understand why they give the output they give. And that research could be for just one prediction of one word.

For me, it’s the next major milestone in a roughly decade-long trend of research, and the groundbreaking part is how rapidly it accelerated. We saw a similar boom in 2012-2018, and now it’s just accelerating.

Before 2011/2012, if your network was too deep (too many layers), it would just break down and give pretty random results; it couldn’t learn, so networks had to perform relatively simple tasks. Then a few techniques were developed that enabled deep learning: the ability to really stretch the number of patterns a network could learn if given enough data. Suddenly, things that were jokes in computer science became reality. The move from deep networks to 95% image recognition accuracy, for example, took about 1 year to halve the error rate and about 5 years to go from about 35-40% incorrect classification to 5%. That’s the same stuff that powered all the hype around AI beating Go champions and professional StarCraft players.

The Transformer (the T in GPT) came out in 2017, around the peak of the deep learning boom. Two years later, GPT-2 was released, and while it’s funny to look back on now, it practically revolutionized temporal data coherence and showed that throwing lots of data at this architecture didn’t break it, as it had broken previous ones. Then they kept throwing more and more data at it, and it kept improving. With GPT-3 about a year later, just like in 2012, we saw an immediate spike in previously impossible challenges being destroyed, and seemingly the models haven’t degraded with more data yet. While it’s unsustainable, it’s the same kind of puzzle piece that pushed deep learning to the forefront in 2012, and the same concepts are being applied to other domains like image generation, which has also seen massive boosts thanks in part to the 2017 research.
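The core operation the Transformer introduced is attention: each position scores every other position, turns those scores into weights with a softmax, and takes a weighted average of their values, i.e. softmax(QKᵀ/√d)V. A minimal single-head sketch in plain Python, assuming no learned projections, no masking, and no multiple heads (all of which real models add):

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one output row per query."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled so large d doesn't saturate the softmax.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs
```

If every key looks the same to a query, the output is the plain average of the values; if one key matches strongly, the output is essentially that key's value. Because every position can look at every other one directly, the same operation scales to long sequences without the step-by-step bottleneck of older recurrent models.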

Anyways, small rant, but yeah: its hype lies in its historical context, for me. The chatbot is an incredible demonstration of the underlying advancements in data processing made over the past decade, and if working out patterns from massive quantities of data is a pointless endeavour, I have sad news for all folks with brains.

Do you have any further reading on this topic? This has been such an amazing read.

Hmm… Nothing off the top of my head right now. I checked out the Wikipedia page for Deep Learning and it’s not bad, though it has quite a bit of technical info and jumps around the timeline; it does go all the way back to the 1920s in its history, which gives you jumping-off points. Most of what I know came from grad school, having researched creative AI around 2015-2019, and being a bit obsessed with it growing up, before and during my undergrad.

If I were to pitch some key notes: the page details lots of the cool networks that dominated from the 1960s to the 2000s, but it’s worth noting that there were lots of competing models besides neural nets at the time. Then, in 2011, two things happened at right about the same time. First, the ReLU (a simple way to help preserve data through many layers, increasing complexity), which, while established in the ’60s, only swept deep learning in 2011. Second, and majorly, Nvidia’s cheap graphics cards with parallel processing and CUDA were found to massively boost the efficiency of running networks.
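Part of why depth used to “break” networks is the vanishing gradient: backpropagation multiplies in one activation derivative per layer, and the sigmoid’s derivative never exceeds 0.25, so the learning signal shrinks geometrically with depth. ReLU’s derivative is exactly 1 for positive inputs. A toy Python sketch, assuming a chain of scalar layers with weight 1 (real networks use weight matrices and careful initialization, so treat this as intuition only):

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x == 0


def relu(x):
    return max(0.0, x)


def relu_grad(x):
    return 1.0 if x > 0 else 0.0


def input_gradient(depth, act, act_grad, x=0.5, weight=1.0):
    """Chain rule through `depth` scalar layers h = act(weight * h): returns d(output)/d(input)."""
    grad = 1.0
    h = x
    for _ in range(depth):
        z = weight * h
        grad *= weight * act_grad(z)  # one local derivative multiplied in per layer
        h = act(z)
    return grad


for depth in (1, 5, 10, 20):
    print(depth, input_gradient(depth, sigmoid, sigmoid_grad), input_gradient(depth, relu, relu_grad))
```

With depth 10, the ReLU chain passes the gradient through unchanged, while the sigmoid chain’s gradient is below 0.25**10, under one in a million. The flip side is that ReLU’s gradient is 0 for negative inputs, which is why “dead” units can happen.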

I found a few links with some cool perspectives: Nvidia post with some technical details

Solid and simplified timeline with lots of great details

It does exclude a few of the big popular-culture events, like Watson on Jeopardy in 2011. To me it’s fascinating because Watson’s architecture was an absolute mess by today’s standards: over 100 different algorithms working in conjunction, mixing tons of techniques together to get a pretty specifically tuned question-and-answer machine. It took 2,880 CPU cores to run, and it could win about 70% of the time at Jeopardy. Compare that to today’s GPT models: while ChatGPT requires far more processing power to run, they have an otherwise elegant structure, and I can run awfully competent ones on a $400 graphics card. I was actually in a gap year waiting to start my undergrad in AI and robotics during the Watson craze, so seeing it and then seeing the 2012 big bang was wild.

Well, one could argue that our brain is a glorified tape recorder.

behold! a tape recorder.

holds up a plucked chicken

He’s a physicist. That doesn’t make him wise, especially in topics that he doesn’t study. This shouldn’t even be an article.

They could know quite a lot. ML is still a rather shallow field compared to the more established sciences; it’s arguably not even a proper science yet, perhaps closer to alchemy than chemistry. Max Tegmark is a cosmologist and he has learned it well enough for his opinion to count. This guy, on the other hand, is famous for his bad takes and has apparently gotten a lot wrong about QC even though he wrote a whole book about it.

I call them glorified spreadsheets, but I see the parallel with tape recorders. LLMs, like most “AIs” before them, are just new ways to do line-of-best-fit analysis.
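Taken literally, “line of best fit” means ordinary least squares: choose the slope and intercept that minimize the squared error over the data. A minimal Python sketch of the one-dimensional case, purely to pin down what the phrase means:

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the fitted line passes through the means.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept
```

For example, `fit_line([0, 1, 2, 3], [1, 3, 5, 7])` recovers slope 2 and intercept 1. A neural network also picks parameters by minimizing an error over data, but the function it fits is not restricted to a straight line.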

That’s fine. Glorify those spreadsheets. It’s a pretty major thing to have cracked.

It is. The tokenization and intent processing are the things that impress me most. I’ve been joking since the ’90s that the most impressive technological innovation shown on Star Trek TNG was computers that understand the intent of instructions. Now we have that… mostly.

To counter the grandiose claims that present-day LLMs are almost AGI, people go too far in the opposite direction. Dismissing it as being only “line of best fit analysis” fails to recognize the power, significance, and difficulty of extracting meaningful insights and capabilities from data.

Aside from the fact that many modern theories in human cognitive science are actually deeply related to statistical analysis and machine learning (such as embodied cognition, Bayesian predictive coding, and connectionism), referring to it as a “line” of best fit is disingenuous because it downplays the important fact that the relationships found in these data are not lines, but rather highly non-linear high-dimensional manifolds. The development of techniques to efficiently discover these relationships in giant datasets is genuinely a HUGE achievement in humanity’s mastery of the sciences, as they’ve allowed us to create programs for things it would be impossible to write out explicitly as a classical program. In particular, our current ability to create classifiers and generators for unstructured data like images would have been unimaginable a couple of decades ago, yet we’ve already begun to take it for granted.
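A small illustration of the “not lines” point, using the textbook XOR example: the best least-squares plane through the four XOR points predicts 0.5 everywhere, while a two-unit ReLU layer represents XOR exactly. The ReLU weights below are hand-set for illustration rather than learned, and the tiny linear solver is only meant for this 3×3 case.

```python
# XOR truth table: ((x1, x2), y)
XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def solve(a, b):
    """Solve a small linear system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def linear_least_squares(data):
    """Fit y = w0 + w1*x1 + w2*x2 via the normal equations X^T X w = X^T y."""
    rows = [[1.0, x1, x2] for (x1, x2), _ in data]
    ys = [y for _, y in data]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    return solve(xtx, xty)


def relu(x):
    return max(0.0, x)


def tiny_relu_net(x1, x2):
    """Hand-set two-unit ReLU layer that computes XOR exactly on {0, 1} inputs."""
    h1 = relu(x1 + x2)
    h2 = relu(x1 + x2 - 1.0)
    return h1 - 2.0 * h2


print("best plane weights:", linear_least_squares(XOR))
print("relu net outputs:", [tiny_relu_net(*x) for x, _ in XOR])
```

Training would find weights like these by optimization rather than by hand; the point is only that one kink per hidden unit already buys something no line or plane can express.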

So while it’s important to temper expectations, since we are a long way from ever seeing anything resembling AGI as it’s typically conceived of, oversimplifying all neural models as “just” line fitting blinds you to the true power and generality that such a framework of manifold learning through optimization represents, as it relates to information theory, energy and entropy in the brain, engineering applications, and the nature of knowledge itself.

Ok, it’s a best-fit line on an n-dimensional matrix querying a graph DB ;)

My only point is that this isn’t AGI, and too many people still fail to recognize that. Now people are becoming disillusioned with it because they’re realizing it isn’t actually creative. It’s still just a fancy comparison engine. That’s not not world changing, but it’s also not Data from Star Trek.

I get that, but what I’m saying is that calling deep learning “just fancy comparison engine” frames the concept in an unnecessarily pessimistic and sneery way. It’s more illuminating to look at the considerable mileage that “just pattern matching” yields, not only for the practical engineering applications, but for the cognitive scientist and theoretician.

Furthermore, what constitutes being “actually creative”? Consider DeepMind’s AlphaGo Zero model:

> Mok Jin-seok, who directs the South Korean national Go team, said the Go world has already been imitating the playing styles of previous versions of AlphaGo and creating new ideas from them, and he is hopeful that new ideas will come out from AlphaGo Zero. Mok also added that general trends in the Go world are now being influenced by AlphaGo’s playing style.

Professional Go players and champions concede that the model developed novel styles and strategies that now influence how humans approach the game. If that can’t be considered a true spark of creativity, what can?

Kaku is a quack.

Theoretical physicist and a questionable one at that

More people need to learn about Racter. This is nothing new.

Thanks for the good article link.