cross-posted from: https://hexbear.net/post/3613920

Get fuuuuuuuuuuuuuucked

“This isn’t going to stop,” Allen told the New York Times. “Art is dead, dude. It’s over. A.I. won. Humans lost.”

“But I still want to get paid for it.”

“Famous”?

Never heard of him.

eat shit dude

ChatGPT, show me the world’s tiniest violin playing “No One Gives a Fuck” in A minor.

I wish that was true for minors 😔

Get a real job

They forgot to put “Artist” in quotes too

You’re not wrong, but he also might not be either :)

“”“Artist”“”

i think “content creator” would be a better term in this case. because i’m not convinced it’s art, but it sure is “content”. maybe “content requester” would be more accurate.

“Famous” A“I” “Artist”

I said this when it was posted elsewhere- how is he calculating those millions of dollars?

RIAA and MPAA anti-piracy strategy, aka, made up bullshit.

Probably the same way companies tried to sue The Pirate Bay because the bay had lost them more money than existed in the world economy.

It’s easy. He chose an arbitrary number that was very big but just shy of 99% unrealistic, according to his own flawed judgement.

Ha! Not the onion was made for this headline!

Lol.

Another idiot who thinks “prompt engineering” is a real skill and not just another way those companies use idiots for free AI training.

You ask AI to draw a ninja turtle on a skateboard, and the “effort” you put into phrasing the request well enough for the AI to understand teaches it that the 10 past attempts were looking for what the 11th got

And now it won’t take ten tries to go that route

Any “skill” by the user has a very short expiration date because the next version won’t need it thanks to all the time users spent developing those “skills”.

But no one impressed with AI is smart enough to realize that. And since they’re the ones training the AI…

Idiots in, idiots out

You don’t have a clue how ai works do you?

Can you point out what’s supposedly wrong with their comment or are you just claiming that every critic of so-called “AI” doesn’t have a clue to justify the hype?

I don’t know about that, in particular, because people generally add more detail, but it teaches the AI what kind of detail to add. So if you’re not picky, then yeah, the AI learns from that kind of thing.

As far as it being a useful skill, I don’t think it was in the first place. “Prompt engineer” has always been a joke. It’s like being a “sandwich artist”. Everyone can do it with one day of practice.

“Prompt engineering” is as useful a skill as Google-fu used to be.

I completely agree. I wonder whether some IT bachelor’s degrees now have lessons in AI prompting. I remember in 2005 there was a course we had to do which could’ve been labeled “[shitty] Google-Fu” or something. “Information searching” is what it would more or less translate to. Basically searching well using Google and library searches. And I don’t mean “library” in the IT context, but actual libraries. With books. Just had to use the search tools the local libraries had.

Such a fucking filler class.

In my year like 60 started, two classes. After three years like 8 graduated.

I’ve worked with tons of people who do not understand how to effectively use search engines. Maybe this was done poorly but it seems reasonable enough to me in principle.

It’s kinda dead now due to enshittification but the vast majority of humans I’ve interacted with could use a class on how to use a search engine.

Edit- it could be made more modern by showing how to ignore sponsored stuff, blatantly SEO shit, AI shit, etc

If the class had actually had any useful information in it, sure.

It was not the greatest class.

I’m old enough to have had to learn to use AND, OR and NOT on search engines.

My email service, Port87, uses boolean operators in its search language. Polish notation, even!

And the library that does it is open source:

Boolean operators!
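For anyone who has not seen boolean operators in Polish (prefix) notation, here is a toy sketch of how such a search could be evaluated. To be clear, this is not Port87’s syntax or the library mentioned above: the function names `parse`, `matches` and `search`, the uppercase operator spelling, and the sample documents are all made up for illustration.

```python
def parse(tokens):
    """Consume one prefix expression from the token list and return a tree."""
    token = tokens.pop(0)
    if token in ("AND", "OR"):
        left = parse(tokens)
        right = parse(tokens)
        return (token, left, right)
    if token == "NOT":
        return (token, parse(tokens))
    return token.lower()  # anything else is a plain search term


def matches(tree, words):
    """Evaluate the parsed tree against the set of words in one document."""
    if isinstance(tree, str):
        return tree in words
    op = tree[0]
    if op == "AND":
        return matches(tree[1], words) and matches(tree[2], words)
    if op == "OR":
        return matches(tree[1], words) or matches(tree[2], words)
    return not matches(tree[1], words)  # the only remaining operator is NOT


def search(query, documents):
    """Return the documents that satisfy a prefix-notation boolean query."""
    tree = parse(query.split())
    return [d for d in documents if matches(tree, set(d.lower().split()))]


# Hypothetical sample data, purely for illustration.
docs = [
    "invoice from the library",
    "library newsletter with ads",
    "invoice for skateboard",
]

# Infix equivalent: library AND (NOT ads)
print(search("AND library NOT ads", docs))
```

The nice part of prefix notation is that the operator comes first, so the parser never needs parentheses or precedence rules: each operator simply consumes the next one or two expressions.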

I use ai when I use search engines. This makes the search engines better. I also use ai when I get spotify suggestions. I use ai when I use autocorrect. I use ai without even realizing I’m using ai and the ai improves from it, and I and many other people get an improved quality of life from it, that’s why nearly everyone uses it just like I do.

So, @givesomefucks , do you also regularly use ai that improves from your usage? Or are you not a hypocrite who thinks there is something morally bad about specific ais that you don’t like while doing exactly what you claim to be against with other ais? How are your moral lines drawn?

Thanks for the example!

Whether an individual determines AI “smart” depends on how smart the person is. We’re all our own frame of reference.

I have no doubt AI impresses you every day of your life, even stuff that’s not AI apparently, because not all of your examples were.

You are just ignorant of the history and evolution of the term “AI”. It’s easy for anyone to learn about its history, your point of view is just one of ignorance of the past.

I think you were downvoted by people who think “AI” was invented in the past decade.

> I use ai when I use search engines. This makes the search engines better.

They didn’t say better for whom.

Thanks for demonstrating what a useless term “AI” is when you’re not trying to sell snake oil.

Every word in every language changes over time. The term AI changing is the absolute normal. It’s not some mark against it.

Current llms are phenomenally beneficial for some things. Millions of developers have had their entire careers completely changed. Teachers are able to grade work in 10% of the time. Children through to college students and anyone interested in learning have infinitely patient tutors on demand 24 hours a day. The fact that you are completely clueless about what is going on doesn’t by any stretch of the imagination mean it isn’t happening. It just means that you not only feel like you are “beyond learning”, it also means that you don’t even have people in your life that are still interested in personal growth, or you are too shallow to have conversations with anyone who is.

This is just beginning. The more you cling to being in denial of progress, the further you will get behind. You are denying any mode of transportation other than horses even exists, while people are routinely flying around the world. It most likely won’t be too long until your mindset is widely accepted as a mental disorder.

> Every word in every language changes over time. The term AI changing is the absolute normal. It’s not some mark against it.

Lumping machine learning algorithms, llms, regressive learning, search algorithms all in one bucket and calling it “AI” serves no proper purpose. There is no consensus, it’s not a clear definition, it’s not convenient and it only helps sell bullshit. Llms aren’t intelligent. Calling them that is the opposite of useful.

> Current llms are phenomenally beneficial for some things.

Namely: the portfolio of tech shareholders and grifters.

> Millions of developers have had their entire careers completely changed.

Lol, no. What’s your source for this?

> Teachers are able to grade work in 10% of the time.

Poor students.

> Children through to college students and anyone interested in learning have infinitely patient tutors on demand 24 hours a day.

Have you heard of the stories where students believed some AI bullshit more than what their teacher told them? Great “tutor” you have there.

> The fact that you are completely clueless about what is going on

Sure, bud. /s

> It just means that you not only feel like you are “beyond learning”, it also means that you don’t even have people in your life that are still interested in personal growth, or you are too shallow to have conversations with anyone who is.

Oh, please tell me more about my life, stranger on the internet! /s

What an asshole, seriously.

Have fun in your tech cult, you ableist bootlicker.

Yesterday’s AI is today’s normal technology, this is just what keeps happening. Some people just keep forgetting how rapidly things are changing.

You’ll join this “cult” once the masses do, just like you have been doing all along. Some of us are just out here a little bit in the future. You will be one of us when you think it becomes cool, and then you will self-righteously act like you were one of us all along. That’s just what weak-minded followers do. They try to seem like they knew all along where the world was headed without ever trying to look ahead and ridiculing anyone who does.

The thing you’re evangelizing only leads to more consolidation of power and money, loss of jobs and power for the working class and climate devastation.

Yeah, technological progress has historically made life worse for humans.

There is a reason why you point to examples from years ago, that’s because that is where you are still stuck.

Students “correcting” their teachers on AI bullshit isn’t “from years ago”.

Old examples of AI I counted used to be the bleeding edge of AI research. Now they’re old hat. The same thing will happen to LLMs. And LLMs won’t lead to so-called “AGI”, just like the other examples didn’t.

Fucking rekt, gd, do y’all have some history?

Think he’ll try to use a llm as his lawyer?

This article is annoyingly one-sided. The tool performs an act of synthesis just like an art student looking at a bunch of art might. Sure, like an art student, it could copy someone’s style or even an exact image if asked (though those asking may be better served by torrent sites). But that’s not how most people use these tools. People create novel things with these tools and should be protected under the law.

It’s deterministic. I can exactly duplicate your “art” by typing in the same sentence. You’re not creative, you’re just playing with toys.

That’s actually fundamentally untrue, like independent of your opinion, I promise that when people generate an image with a phrase it will be different and is not deterministic (not in the way you mean).

You and I cannot type the same prompt into the same AI generative model and receive the same result, no system works with that level of specificity, by design.

They pretty much all use some form of entropy / noise.

It’s literally as true as it can possibly be. Given the same inputs (including the same seed), a diffusion model will produce exactly the same output every time. It’s deterministic in the most fundamental meaning of the word. That’s why when you share an image on CivitAI people like it when you share your input parameters, so they can duplicate the image. I have recreated the exact same images using models from there.
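To make the seed point concrete, here is a toy sketch. It is not a diffusion model and has nothing to do with Flux or any real sampler: `toy_generate` is a made-up stand-in that starts from seeded pseudo-random noise and then applies a fixed transformation, which is the only property being illustrated. The prompt and the seed values are arbitrary.

```python
import random


def toy_generate(prompt, seed=None, size=8):
    """Toy stand-in for an image sampler (NOT a real diffusion model).

    Draws pseudo-random "noise" from a seeded generator, then applies a
    fixed, deterministic transformation that depends on the prompt.
    """
    rng = random.Random(seed)  # seed=None means the generator seeds itself randomly
    noise = [rng.random() for _ in range(size)]
    # Deterministic "denoising" step: blend each noise value with a
    # number derived from the prompt text.
    prompt_value = (sum(prompt.encode("utf-8")) % 256) / 255
    return [round(0.5 * n + 0.5 * prompt_value, 6) for n in noise]


prompt = "ninja turtle on a skateboard"

same_a = toy_generate(prompt, seed=1234)
same_b = toy_generate(prompt, seed=1234)
other_seed = toy_generate(prompt, seed=9999)

print(same_a == same_b)      # same prompt and same seed: identical output
print(same_a == other_seed)  # same prompt, different seed: different output
```

Calling `toy_generate(prompt)` with no seed picks a fresh random seed each time, so two people typing the same sentence would almost certainly get different results unless they also share the seed and every other input.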

Humans are not deterministic (at least as far as we know). If I give two people exactly the same prompt, and exactly the same “training data” (show them the same references, I guess), they will never produce the same output. Even if I give the same person the same prompt, they won’t be able to reproduce the same image again.

I do actually believe that everything, including human behavior is deterministic. I also believe there is nothing special about human consciousness or creation tbh

Try it out and show us the result.

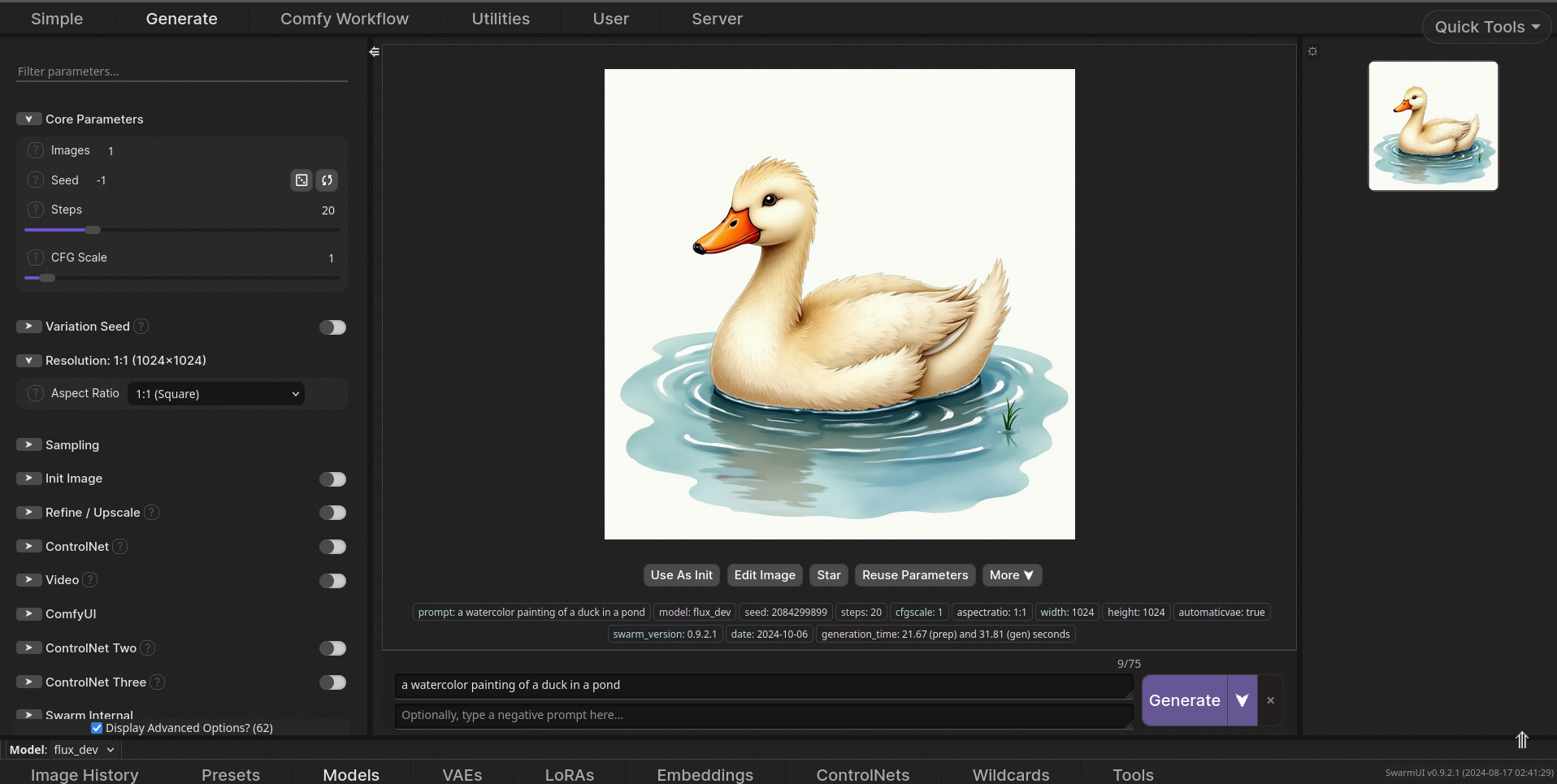

Ok, here’s an image I generated with a random seed:

Here’s the UI showing it as a result:

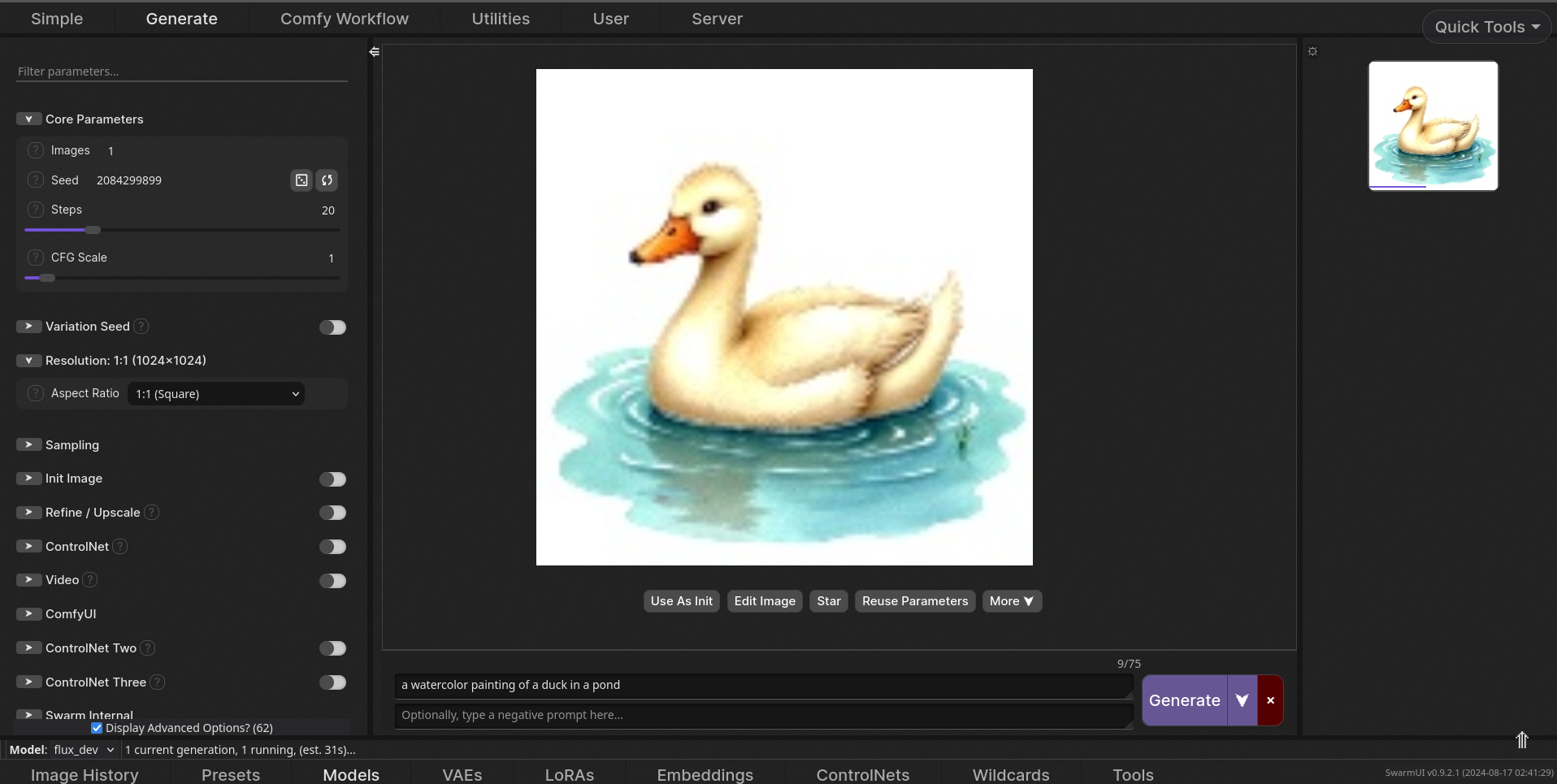

Then I reused the exact same input parameters. Here you can see it in the middle of generating the image:

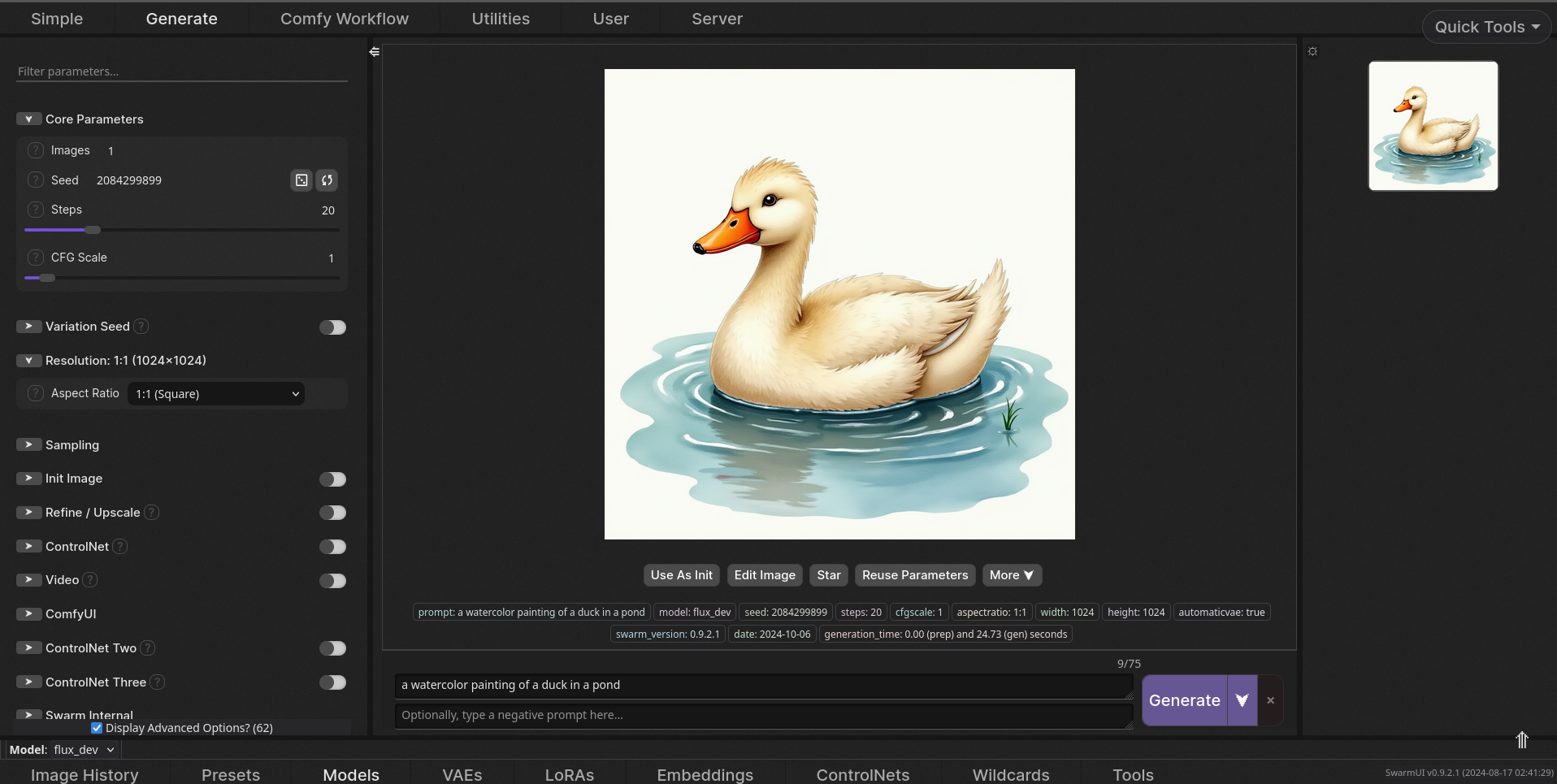

Then it finished, and you can see it generated the exact same image:

Here’s the second image, so you can see for yourself compared to the first:

You can download Flux Dev, the model I used for this image, and input the exact same parameters yourself, and you’ll get the same image.

But you’re using the same seed. Isn’t the default behaviour to use random seed?

And obviously, you’re using the same model for each of these, while these people would probably have a custom trained model that they use which you have no access to.

That’s not really proof that you can replicate their art by typing the same sentence like you claimed.

If you didn’t understand that I clearly meant with the same model and seed from the context of talking about it being deterministic, that’s a you problem.

Bro, it’s you who said type the same sentence. Why are you saying the wrong things and then try to change your claims later?

The problem is that you couldn’t be bothered to try and say the correct thing, and then have the gall to blame other people for your own mistake.

And in what kind of context does using the same seed even make sense? Do people determine the seed first before creating their prompt? This is a genuine question, btw. I’ve always thought that people generally use a random seed when generating an image until they find one they like, then use that seed to modify the prompt to fine tune it.

In the context that I’m explaining that the thing is deterministic. Do you disagree? Because that was my point. Diffusion models are deterministic.

So what you’re saying is that the AI is the artist, not the prompter. The AI is performing the labor of creating the work, at the request of the prompter, like the hypothetical art student you mentioned did, and the prompter is not the creator any more than I would be if I kindly asked an art student to paint me a picture.

In which case, the AI is the thing that gets the authorial credit, not the prompter. And since AI is not a person, anything it authors cannot be subjected to copyright, just like when that monkey took a selfie.

It should be as copyrightable as the prompt. If the prompt is something super generic, then there’s no real work done by the human. If the prompt is as long and unique as other copyrightable writing (which includes short works like poems) then why shouldn’t it be copyrightable?

> If the prompt is as long and unique as other copyrightable writing (which includes short works like poems) then why shouldn’t it be copyrightable?

Okay, so the prompt can be that. But we’re talking about the output, no? My hello-world source code is copyrighted, but the output “hello world” on your machine isn’t really, no?

Does it require any creative thought for the user to get it to write “hello world”? No. Literally everyone launching the app gets that output, so obviously they didn’t create it.

A better example would be a text editor. I can write a poem in Notepad, but nobody would claim that “Notepad wrote the poem”.

It’s wild to me how much people anthropomorphize AI while simultaneously trying to delegitimize it.

Because it wasn’t created by a human being.

If I ask an artist to create a work, the artist owns authorship of that work, no matter how long I spent discussing the particulars of the work with them. Hours? Days? Months? Doesn’t matter. They may choose to share or reassign some or all of the rights that go with that, but initial authorship resides with them. Why should that change if that discussion is happening not with an artist, but with an AI?

The only change is that, not being a human being, an AI cannot hold copyright. Which means a work created by an AI is not copyrightable. The prompter owns the prompt, not the final result.

You’re assigning agency to the program, which seems wrong to me. I think of AI like an advanced Photoshop filter, not like a rudimentary person. It’s an artistic tool that artists can use to create art. It does not in and of itself create art any more than Photoshop creates graphics or a synthesizer creates music.

How do the actions of the prompter differ from the actions of someone who commissions an artist to create a work of art?

I don’t think commissioning a work is ever as hands-on as using a program to create a work.

I suspect the hangup here is that people assume that using these tools requires no creative effort. And to be fair, that can be true. I could go into Dall-E, spend three seconds typing “fantasy temple with sun rays”, and get something that might look passable for, like, a powerpoint presentation. In that case, I would not claim to have done any artistic work. Similarly, when I was a kid I used to scribble in paint programs, and they were already advanced enough that the result of a couple minutes of paint-bucketing with gradients might look similar to something that would have required serious work and artistic vision 20 years prior.

In both cases, these worst-case examples should not be taken as an indictment of the medium or the tools. In both cases, the tools are as good as the artist.

If I spend many hours experimenting with prompts, systematically manipulating it to create something that matches my vision, then the artistic work is in the imagination. MOST artistic work is in the imagination. That is the difference between an artist and craftsman. It’s also why photography is art, and not just “telling the camera to capture light”. AI is changing the craft, but it is not changing the art.

Similarly, if I write music in a MIDI app (or whatever the modern equivalent is; my knowledge of music production is frozen in the 90s), the computer will play it. I never touch an instrument, I never create any sound. The art is not the sound; it is the composition.

I think the real problem is economic, and has very little to do with art. Artists need to get paid, and we have a system that kinda-sorta allows that to happen (sometimes) within the confines of a system that absolutely does not value artists or art, and never has. That’s a real problem, but it is only tangentially related to art.

should a camera also own the copyright to the pictures it takes? (I seriously hate photographers)

Ah, but there is a fundamental difference there. A photographer takes a picture, they do not tell the camera to take a picture for them.

It is the difference between speech and action.

> The tool performs an act of synthesis just like an art student looking at a bunch of art might.

Lol, no. A student still incorporates their own personality in their work. Art by humans always communicates something. LLMs can’t communicate.

> People create novel things with these tools and should be protected under the law.

I thought it’s “the tool” that “performs an act of synthesis”. Do people create things, or the LLM?

No no, he created the prompt. That’s the artistic value /s

the machine learning model creates the picture, and does have a “style”, the “style” has been at least partially removed from most commercial models but still exists.

It doesn’t have a “style”. It stores a statistical correlation of art styles.

different models will have been trained on different ratios of art styles, one may have been trained on a large number of oil paintings and another pencil sketches, these models would provide different outputs to the same inputs.

You’re not stating anything different than my “correlation” statement.

Not the Onion. This was unexpected…