- cross-posted to:

- [email protected]

Google is embedding inaudible watermarks right into its AI-generated music

Audio created using Google DeepMind’s AI Lyria model will be watermarked with SynthID to let people identify its AI-generated origins after the fact.

People are listening to AI-generated music? Someone on Bluesky put it best (paraphrased slightly):

If they couldn’t put time into creating it I’m not going to put time into listening to it.

I think I’d rather listen to some custom AI generated music than the same royalty free music over and over again.

In both cases they’re just meant to be used in videos and stuff like that, you’re not supposed to actually listen to them.

Fun fact: Steve1989MREInfo uses all of his original music for his videos.

This is the ultimate YouTuber power move. Exurb1a and RetroGamingNow do it too!

A number of YouTubers do... and some of it’s even good, lol. John at Plainly Difficult and Ahti at AT Restorations are two that use their own music that I can think of off the top of my head.

Sam with GeoWizard. Actually, quite a few “big” channels do, which is awesome.

People are using AI tools to do crazy stuff with music right now. It’s pretty great

Human performance but AI voice: https://www.youtube.com/watch?v=gbbUWU-0GGE

Carl Wheezer covers: https://www.youtube.com/watch?v=65BrEZxZIVQ

Here is an alternative Piped link(s):

https://www.piped.video/watch?v=gbbUWU-0GGE

https://www.piped.video/watch?v=65BrEZxZIVQ

Piped is a privacy-respecting open-source alternative frontend to YouTube.

I’m open-source; check me out at GitHub.

You tell 'em, bot. 🙌🏽

Can it be much different from the mass-market auto-tuned pap that gets put out today?

The singers of that music actually have to use their voice to sing into a mic compared to someone on a computer typing in a prompt.

As much as I dislike modern pop music, I will definitely say they put in more work than the people who rely solely on an AI that will do all the work based on a prompt.

My own feelings on the matter aside (fuck google and all that), this has been something chased after for a long time. The famous composer Raymond Scott dedicated the back end of his life to trying to create a machine that did exactly this. Many famous musical creators such as Michael Jackson were fascinated by the machine and wanted to use it. The problem was that he never “finished” it. The machine worked and it could generate music; it’s immensely fascinating in my opinion.

If you want more information in podcast format, check out episode 542 of 99% Invisible or here: https://www.thelastarchive.com/season-4/episode-one-piano-player

They go into the people who opposed Scott and why they did, and also talk about the emotion behind music and the artists, and if it would even work. Because the most fascinating part of it all was that the machine was kind of forgotten and it no longer works. Some currently famous musicians are trying to work together to restore it.

The question then is, if someone created their life’s work and modern musicians spend an immense amount of time restoring the machine, when the machine creates music does that mean no one spent time on it? I enjoy debating the philosophy behind the idea in my head, especially since I have a much more negative view when a modern version of this is done by Google.

I feel like the machine itself would be the art in that case, not necessarily what it creates. Like if someone spent a decade making a machine that could cook FLAWLESS BEEF WELLINGTON, the machine would be far more impressive and artistic than the products it made

i mean, where do you draw the line necessarily between the machine and what it creates? the machine itself is totally useless without inputs and outputs, not to say art needs utility. the beef wellington machine is only notable on its ability to conjure beef wellington, otherwise it’s just a nothing machine. which is still kind of cool, I guess, but the beef wellington machine not making beef wellington is kind of a disregard for the core part of the machine, no?

That was a great episode of 99PI. Would love the machine restored.

IIRC, it’s not so much that it made music, but that it would create loops through iteration to inspire people. He wanted it to make full music but it was never close to that.

Yeah I think you’re right, and it was apparently actually random. The longer it would play a loop the more it would iterate. Such a cool thing to exist

You will still listen to it, watching movies, advertisements, playing video games…

This is the worst timeline

This is the worst time line so far.

Not yet.

Yikes. TIL you think music sounds good based on how much time went into making it, not how it actually sounds.

Can’t wait for you to hear something you like then pretend it’s bad when you find out it was made by AI.

This assumes music is made and enjoyed in a void. It’s entirely reasonable to like music much more if it’s personal to the artist. If an AI writes a song about a very intense and human experience it will never carry the weight of the same song written by a human.

This isn’t like food, where snobs suddenly dislike something as soon as they find out it’s not expensive. Listening to music often has the listener feel a deep connection with the artist, and that connection is entirely void if an algorithm created the entire work in 2 seconds.

What if an AI writes a song about its own experience? Like how people won’t take its music seriously?

It will depend on whether or not we can empathize with its existence. For now, I think almost all people consider AI to be just language learning models and pattern recognition. Not much emotion in that.

just language learning models

That’s because they are just that. Attributing feelings or thought to the LLMs is about as absurd as attributing the same to Microsoft Word. LLMs are computer programs that self optimise to imitate the data they’ve been trained on. I know that ChatGPT is very impressive to the general public and it seems like talking to a computer, but it’s not. The model doesn’t understand what you’re saying, and it doesn’t understand what it is answering. It’s just very good at generating fitting output for given input, because that’s what it has been optimised for.

Glad you’re at least open to the idea.

“I dunno why it’s hard, this anguish–I coddle / Myself too much. My ‘Self’? A large-language-model.”

I noticed some of your comments are disappearing from this thread, is that you or mods?

I’m getting nuked from another thread

Language models don’t experience things, so it literally cannot. In the same way, an equation doesn’t experience the things its variables are intended to represent in the abstract of human understanding.

Calling language models AI is like calling skyscrapers trees. I can sorta get why you could think it makes sense, but it betrays a deep misunderstanding of construction and botany.

What makes your experiences more valid than that of AI?

It is not a measure of validity. It is a lack of capacity.

What is the experience of a chair? Of a cup? A drill? Do you believe motors experience, while they spin?

Language models aren’t actual thought. This isn’t a discussion about if non-organic thought is equivalent to organic thought. It’s an equation that uses words and the written rules of syntax instead of numbers. It’s not thinking, it’s a calculator.

The only reason you think a language model can experience is because a marketing man misattributed the name “AI” to it. It’s not artificial intelligence. It’s a word equation.

You know how we get all these fun and funny memes where you rephrase a question, and you get a “rule breaking” answer? That’s because it’s an equation, and different inputs avoid parts of the calculation. Thought doesn’t work that way.

I get that the calculator is very good at calculating words. But that’s all it is. A calculator.

What makes your thoughts ‘actual thought’ and not just an algorithm?

Oddly, I’d find a piece of music written by an ai convinced it was a chair extremely artistic lol. But yeah, just because the algorithm that’s really good at putting words together is trying to convince you it has feelings, doesn’t mean it does.

That’s a parasocial relationship and it’s not healthy, sure Taylor Swift is kinda expressing her emotions from real failed relationships but you’re not living her life and you never will. Clinging to the fantasy of being her feels good and makes her music feel special to you but it’s just fantasy.

Personally I think it would be far better if half the music was ai and people had to actually think about whether what they’re listening to actually sounds good and interesting, rather than being meaningless mush pumped out by an image obsessed Scandinavian metal nerd or a pastiche of borrowed riffs thrown together by a drug frazzled brummie.

Lol, somehow you got the above commenter covering the sentiment that a song is better if its message is true to its creator… something a huge percentage of the population would agree with, and you equate that to fan obsession.

People on the internet are wild.

I don’t understand where they got any of that from, lol. It’s like they learned what a parasocial relationship is earlier today and they thought it applied here

I would kind of agree with this if it wasn’t kind of mean and half of it didn’t come out of nowhere, but then it also seems like what you think you value in your own music taste is whether or not something is new, seeing as your main examples of things that are meaningless or bad is “image obsessed scandinavian metal nerd” i.e. derivative and “pastiche of borrowed riffs thrown together by a drug frazzled brummie” i.e. derivative.

Ha no you are right, I was being a dick. I worked long enough in the music industry that it’s scarred my soul and just thinking of it brings up that bile…

But yeah I was just being silly with the band descriptions. I was describing some of the music I like in a flippant way to highlight the absurdity of claiming some great artistic value because Ozzy mumbled about Iron Man traveling time for the future of mankind. Dice could come up with more meaningful lyrics; ‘nobody helps him, now he has his revenge’ is the sort of thing an edgy teenage coke head would come up with, and it’s one of my favourite songs of all time. Another example of the greatest songs of all time is Rasputin by Boney M, famously part of a big controversy when people discovered they were a manufactured band, and again the lyrics and music are both brilliant and awful.

People obsess over nonsense all the time. It’s easy to pretend there’s some deep and holy difference between Bach and Offenbach, but the cancan can mean just as much as any toccata if you let it.

Art is in the eye of the beholder, it has always been thus and will always be thus.

I can’t tell if you’re completely missing the point on purpose, or if you actually don’t understand what I mean lol. Who said anything about Taylor Swift?

You can replace her with whatever music you associate with, what I’m getting at is your connection to it isn’t real - it feels real but that’s because it’s coming from you, you’re putting the meaning in there.

If you could erase all memory of Bach from a classical obsessive’s mind, then play them his greatest hits and say it’s from an AI, they’d say ‘ugly key smashing meaningless drivel’. Maybe they’d admit AI Brahms has some bangers, but without the story behind it and the history of its significance it’s not as magical.

The problem I have is people are too addicted to shortcuts: ‘oh this is Bach, people say he’s great, so this cello suite must be good, therefore I like it’. It’s lazy and dumb. (I use Bach’s cello suite as an example because it’s what’s on the radio, but you can put any bit of music as the example.)

I don’t think that’s OPs point, but it’s interesting how many classic songs were written in less than 30 minutes

As someone that’s more than dabbled in making music, the best tracks I made all came out rather quickly, they still needed a lot of work to finish/polish but tracks that I would spend hours coming up with the core elements would usually be trash and end in the bin, the good stuff would just…happen.

That’s not really a gotcha though. They’re saying they aren’t going to actively seek out and listen to auto-generated music. If they happen to hear some and like it, that wouldn’t mean they actively sought it out and listened to it.

I’m not going to put time into listening to it.

Right, they’re not going to actively put time into listening to music generated by AI.

Hearing music made by AI because it happens to be playing is different from knowingly listening to it. It’s alarming that you need this spelled out so much.

Don’t know why you’re being downvoted, you’re completely right. I’d never seek out to listen to something with no human thought process behind it

Ok, boomer.

How’s that microwave dinner taste? Like an A for effort? Yeah, I bet.

A spectrum analysis and bandpass filter should take care of that.

chuckles contemptuously in Audacity

So we’ll just need another AI to remove the watermarks… which I think already exists.

Don’t even need AI. Basic audio editing works.

Lately on YouTube I’m constantly being bombarded with ai garbage music passed off as normal unknown bands and it’s getting really annoying. What will happen when there’s an actual new band but everyone ignores them because you would think it’s just ai?

What will happen when there’s an actual new band but everyone ignores them because you would think it’s just ai?

Their music will speak for itself and elevate them above the AI that is making worse music.

You’re asking the wrong question. What happens when you hear something you like, then find out it’s made by AI and all of a sudden you have to pretend you never liked it?

A needle in a haystack is much harder to find if the haystack is the size of a truck. People don’t have infinite time to listen to music, and if it’s almost all the same, they’ll stop trying to find upcoming artists, ai or not.

We need an ai to listen to music and tell us what to like by playing it on repeat till we do. Just like the radio stations.

I think probably the vast majority of up and comers in the music scene come from, not just randomly going viral (which I don’t think will be a concern with AI music anyways since it will probably sound like shit for the next 50+ years), but from just trolling around and doing local shows in venues that they know will attract the people who like the noise. I don’t think it’s very hard to distinguish between AI and people in that context.

Music snobs have been doing this for decades, pretending to like the shittiest Pink Floyd b-side because the normies don’t get it and acting like Abba’s entire catalogue isn’t solid bangers because disco isn’t cool, until it was again and then they’d always loved it.

It’ll be just like it always is, Pete Seeger with an axe trying to stop Bob Dylan playing an electric guitar. I remember when people hated d&b and said it wasn’t real music and all that shit; now they’re all telling bullshit stories about how they were og junglist massive.

People will use ai to make really cool things and a loud portion of the population will act superior by pretending it’s bad. Time will pass, and when the next thing comes along all those people will point at the ai music and say ‘your new music will never be as good as real music like that’, but the people listening to atonal arrhythmic echolocation beats to study to, or whatever the next trend is, won’t pay them any attention.

Yeah, I noticed at a young age that most people base their tastes in art around what their peers will accept.

As soon as their peers say AI is ok, they will follow suit.

ai garbage music

actual new band but everyone ignores them because you would think it’s just ai

I think you answered your own question.

Omg the AI voice describing a short is infuriating.

“This man was minding his own business not knowing he was about to change this child’s life…Watch how his interaction is measured…”

Dots Do not recommend this channel again

This raises the question: will AI style be the next big trend? Imagine if real painters started painting oil paintings that look uncanny and surreal like AI-generated art, with weird hands or weird eyes. Imagine if a real quartet decided to play an AI-generated piece of music.

The Audacity!

Hehe.

This is the best summary I could come up with:

Audio created using Google DeepMind’s AI Lyria model, such as tracks made with YouTube’s new audio generation features, will be watermarked with SynthID to let people identify their AI-generated origins after the fact.

In a blog post, DeepMind said the watermark shouldn’t be detectable by the human ear and “doesn’t compromise the listening experience,” and added that it should still be detectable even if an audio track is compressed, sped up or down, or has extra noise added.

President Joe Biden’s executive order on artificial intelligence, for example, calls for a new set of government-led standards for watermarking AI-generated content.

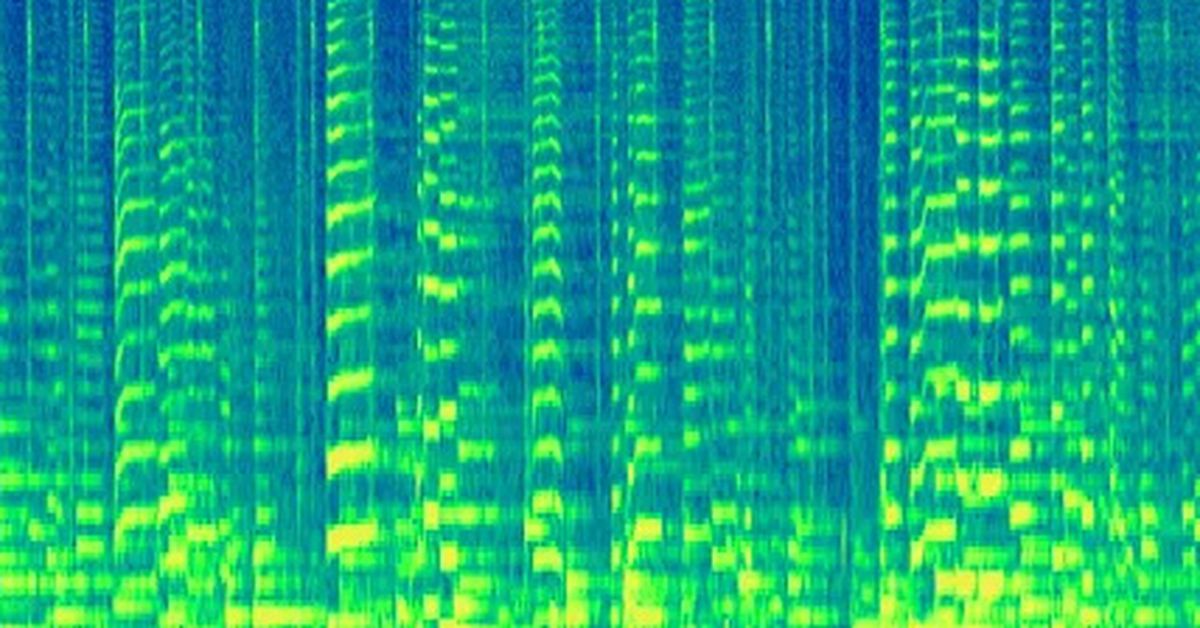

According to DeepMind, SynthID’s audio implementation works by “converting the audio wave into a two-dimensional visualization that shows how the spectrum of frequencies in a sound evolves over time.” It claims the approach is “unlike anything that exists today.”

The news that Google is embedding the watermarking feature into AI-generated audio comes just a few short months after the company released SynthID in beta for images created by Imagen on Google Cloud’s Vertex AI.

The watermark is resistant to editing like cropping or resizing, although DeepMind cautioned that it’s not foolproof against “extreme image manipulations.”

The original article contains 230 words, the summary contains 195 words. Saved 15%. I’m a bot and I’m open source!

it does this by converting the audio into a 2d visualisation that shows how the spectrum of frequencies evolves in a sound over time

Old school windows media player has entered the chat

Seriously fuck off with this jargon, it doesn’t explain anything

That’s actually an accurate description of what is happening: an audio file turned into a 2d image with the x axis being time, the y axis being frequency and color being amplitude.
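For anyone who wants to see that description concretely, here is a minimal sketch of a spectrogram in plain Python. It only illustrates the generic time/frequency/amplitude idea; it is not SynthID's implementation, and the function names, the frame size, the hop, and the toy test tone are all assumptions made up for the example.

```python
import cmath
import math

def dft_magnitudes(frame):
    """Return magnitudes of the first N/2 DFT bins of a real-valued frame."""
    n = len(frame)
    mags = []
    for k in range(n // 2):
        acc = 0j
        for t, x in enumerate(frame):
            acc += x * cmath.exp(-2j * math.pi * k * t / n)
        mags.append(abs(acc))
    return mags

def spectrogram(samples, frame_size=64, hop=32):
    """Slice the signal into overlapping frames and take one spectrum per frame.

    The result is indexed [time_frame][frequency_bin] -> amplitude,
    i.e. x axis = time, y axis = frequency, value = "color".
    """
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        # Hann window to reduce spectral leakage at the frame edges
        windowed = [x * 0.5 * (1 - math.cos(2 * math.pi * i / (frame_size - 1)))
                    for i, x in enumerate(frame)]
        frames.append(dft_magnitudes(windowed))
    return frames

def make_test_tone(sample_rate=8000, seconds=0.1, first_hz=500, second_hz=2000):
    """Hypothetical toy signal: first half at one pitch, second half at another."""
    n = int(sample_rate * seconds)
    return [math.sin(2 * math.pi * (first_hz if i < n // 2 else second_hz) * i / sample_rate)
            for i in range(n)]

def peak_frequency(spectrum, sample_rate, frame_size):
    """Frequency in Hz of the strongest bin in one time slice."""
    k = max(range(len(spectrum)), key=lambda i: spectrum[i])
    return k * sample_rate / frame_size
```

With the toy tone, `peak_frequency(spectrogram(make_test_tone())[0], 8000, 64)` gives 500.0 for the first time slice and the last slice gives 2000.0, which is the "how the spectrum evolves over time" part. Real tools use an FFT rather than this slow direct DFT.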

That’s literally a spectrograph

Spectrogram*

Your mom’s literally a spectrograph.

I know, it’s like the old windows media player visualisations.

Sounds like a bad journalist hasn’t understood the explanation. A spectrogram contains all the same data as was originally encoded. I guess all it means is that the watermark is applied in the frequency domain.

Also this isn’t new by any stretch… Aphex Twin would like a word

Well, encoding stuff in the spectrogram isn’t new, sure. But encoding stuff into an audio file that is inaudible but robust to incidental modifications to the file is much harder. Aphex Twin’s stuff is audible!

I would like to know what it is that makes it so robust. The article explains very little. Is it in the high frequencies? Higher than the human ear can hear? Compression will affect that, plus that’s going to piss dogs off. Could be something with the phasing too. Filters and effects might be able to get rid of the watermark.

I don’t know what frequencies are annoying for dogs but I’m guessing it’s above 24kHz so no sound file or sound system is going to be able to store or produce it anyway.
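The 24kHz figure matches the Nyquist limit of a 48 kHz file (half the sample rate). A rough sketch of that arithmetic, assuming a 48 kHz or 44.1 kHz sample rate and no anti-aliasing filter in front of the sampler; both helper names are made up for illustration:

```python
def nyquist_hz(sample_rate_hz):
    """Highest frequency a given sample rate can represent."""
    return sample_rate_hz / 2

def alias_hz(tone_hz, sample_rate_hz):
    """Frequency a pure tone shows up at after sampling.

    Tones above the Nyquist limit fold back into the representable band
    (this assumes nothing filtered them out before sampling).
    """
    folded = tone_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)
```

So `nyquist_hz(48000)` is 24000.0 and `nyquist_hz(44100)` is 22050.0, and a 30 kHz tone sampled at 48 kHz would not be stored as 30 kHz at all: `alias_hz(30000, 48000)` comes out as 18000. Files with higher sample rates (96 kHz, say) move that ceiling up accordingly.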

There will certainly be some way to get rid of the watermark. But it might nevertheless persist through common filters.

That’s like putting a watermark beside the bill. If it is inaudible then you can just delete it.

I wonder if being able to generate music will make people less interested in actually bothering to learn how to do it themselves. Having an AI tool makes many things so much easier, and you only need a rudimentary understanding of the subject.

Yeah, like most people don’t realise but until about 1900 most piano music was played by humans, of course there were no pianists after the invention of the pianola with its perforated rolls of notes and mechanical keys.

It’s sad, drums were things you hit with a stick once but Mr Theremin ensured you never see a drummer anymore, while Mr Moog effectively ended bass and rhythm guitars with the synthesizer…

It’s a shame it would be fun to go see a four piece band performing live but that’s impossible now no one plays instruments anymore.

People are never going to stop learning to play instruments, if anything they’ll get inspired by using AI to make music and it’ll get them interested in learning to play, they’ll then use ai tools to help them learn and when they get to be truly skilled with their instrument they’ll meet up with some awesomely talented friends to form a band which creates painfully boring and indulgent branded rock.

Those are a bit of a false equivalence, because all of them still required human input to work. AI generated music can be entirely automated, just put in a prompt and tell it to generate 10 and it’ll do the rest for you. Set up enough servers and write enough prompts and you can have hundreds of distinct and unique pieces of AI music put online every minute.

Realistically, putting aside sentimental value, there isn’t a single piece of music that humans have made that an AI couldn’t make. But I hope your optimism turns out to be right :/

I sort of think this is looking at it wrong. That’s looking at music more like a product to be consumed, rather than one which is to be engaged with on the basis that it engenders human creativity and meaning. That’s sort of why this whole debate is bad at conception, imo. We shouldn’t be looking at AI as a thing we can use just to discard music from human hands, or art, or whatever, we should be looking at it as a nice tool that can potentially increase creativity by getting rid of the shit I don’t wanna deal with, or making some part of the process easier. This is less applicable to music, because you can literally just burn a CD of riffs, riffs, and more riffs (buckethead?), but for art, what if you don’t wanna do lineart and just wanna do shading? Bad example because you can actually just download lineart online, or just paint normal style, lineless or whatever. But what if you wanna do lineart without shading and making “real” or “whole” art? Bad example actually you can just sketch shit out and then post it, plenty of people do. But you get the point, anyways.

Actually, you don’t get the point because I haven’t made one. The example I always think of is Klaus. They used AI, or neural networks, or deep learning or matrix calculation or whatever who cares, to automate the 3 dimensional shading of the 2d art, something that would be pretty hard to do by hand and pretty hard to automate without AI. To do it well, at least. That’s an easy example of a good use. It’s a stylistic choice, it’s very intentional, it distinguishes the work, and it does something you couldn’t otherwise just do, for this production, so it has increased the capacity of the studio. It added something and otherwise didn’t really replace anyone. It enabled the creation of an art that otherwise wouldn’t have been, and it was an intentional choice that didn’t add like bullshit, it allowed them to retain their artistic integrity. You could do this with like any piece of art, if you so desired. I think this could probably be the case for music as well, just as T-Pain uses autotune (or pitch correction, I forget the difference) to great effect.

I like these examples. Taken to the extreme, I would still consider a piece of ai generated sheet music played by a human musician to be art, but I guess it’s all subjective in the end. For music specifically, I’ve always been more into the emotional side of it, so as long as the artist is feeling then I can appreciate it.

For sure it would be art, there are a bunch of ways to interpret what’s going on there. Maybe the human adds something through the expression of the timing of how they play the piece, so maybe it’s about how a human expresses freedom in the smallest of ways even when dictated to by some relatively arbitrary set of rules. Maybe it’s about how both can come together to create a piece of music harmoniously. Maybe it’s about the inversion of the conventional structure of how you would compose music and then it would be spread on like, hole punched paper to automated pianos, how now the pianos write the songs and the humans play them. Maybe it’s about how humans are oppressed by the technology they have created. Maybe it’s about all of that, maybe it’s about none of that, maybe some guy just wanted to do it cause it was cool.

I think that’s kind of what I think. I don’t dislike AI stuff, but I think people think about it wrong. Art is about communication, to me. A photo can be of purely nature, and in that way, it is just natural, but the photographer makes choices when they frame the picture. What perspective are they showing you? How is the shot lit? What lens? yadda yadda. Someone shows you a rock on the beach. Why that rock specifically? With AI, I can try to intuit what someone typed in, in order to get the output of a picture from the engine, I can try to deliberate what the inputs were into the engine, I can even guess which outputs they rejected, and why they went with this one over those. But ultimately I get something that is more of a woo woo product meant to impress venture capital than something that’s made with intention, or presented with intention. I get something that is just an engine for more fucking internet spam that we’re going to have to use the same technology to try and filter out so I can get real meaning and real communication, instead of the shadows of it.

I believe it will depend on a couple different factors. Putting keywords into a generator isn’t the same as laying your hands on an instrument, being able to physically play it yourself. However, if the result is so perfect and beautiful that a person could have never possibly come up with it on their own, it might be discouraging (but I can’t really see that happening)

Maybe but people who are good at things already can use it as a tool to be better. You can combine the skills you do have with ai for the skills you don’t have to make something you never could have before.

I like to make games and for me this means I could make my own game music. I just don’t have the skills to do that on my own and make it sound good. But with ai I could get music that matches the quality of my other work.

So basically it’s security through obscurity, since once people know they can and will edit it out, especially those who want to use it for deception.