OK, it’s just a deer, but the future is clear. These things are going to start killing people left and right.

How many kids is Elon going to kill before we shut him down? What’s the number of children we’re going to allow Elon to murder every year?

Elon’s Death

For a brief moment, I was so excited… Rip deer.

We can only hope soon 🙏

Is there a longer video anywhere? Looking closely I have to wonder where the hell did that deer come from? There’s a car up ahead of the Tesla in the same lane, I presume quickly moved back in once it passed the deer? The deer didn’t spook or anything from that car?

This would have been hard for a human driver to avoid hitting, but I know the issue is the right equipment would have been better than human vision, which should be the goal. And it didn’t detect the impact either since it didn’t stop.

But I just think it’s peculiar that that deer just literally popped there without any sign of motion.

Ever hear the phrase “like a deer caught in headlights”? That’s what they do. They see oncoming headlights and just freeze.

It depends. If it’s on the side of the road it may do the opposite and jump in front of you. This one actually looked like it was going to start moving, but not a chance.

It’s the gap between where the deer is in the dark and the car in front that’s odd. Only thing I can figure is the person was in the other lane and darted over just after passing the deer.

The front car is probably further ahead than you think, and a deer can move onto the road quickly and freeze when looking at headlights or slow down if confused. I think in this case the deer was facing away and may not have even heard the vehicle approaching so it wasn’t trying to avoid danger.

I avoided a deer in a similar situation while driving last week, and the car ahead of us was closer than this clip. Just had to brake and change lanes.

The road is paved with squirrels who couldn’t make up their minds.

Sure and living in Wyoming I’ve seen that happen often enough right in front of me but the more I watch this video the more I want to know how that deer GOT there.

I can see a small shrub in the dark off the (right) side of the road but somehow you can’t see the deer enter the lane from either the right or left. The car in front of the Tesla is maybe 40 feet past the deer at the start of the video (watch the reflector posts) but somehow that car had no reaction to the deer standing in the middle of the lane?!

That’s why you flash your lights on and off at them, to get them to unfreeze before you get too close.

Deer will do that. They have absolutely no sense of self-preservation around cars.

That is because at a distance they freeze in case a predator hasn’t noticed them yet. They don’t bolt until they think an attack is imminent, and cars move too fast for them to react.

Is there a longer video anywhere? Looking closely I have to wonder where the hell did that deer come from?

I have the same question. If you watch the video closely the deer is located a few feet before the 2nd reflector post you see at the start of the video. At that point in time the car in front is maybe 20’ beyond the post which means they should have encountered the deer within the last 30-40 feet but there was no reaction visible.

You can also see both the left and right sides of the road at the reflector well before the deer is visible, you can even make out a small shrub off the road on the right, but somehow can’t see the deer enter the road from either side?!

It’s like the thing just teleported into the middle of the lane.

The more I watch this the more suspicious I am that the video was edited.

OK, it’s just a deer…

Vegans have left the chat

What if I told you that this has happened with kids, and was recorded with kid stand-ins? https://www.youtube.com/watch?v=3mnG_Gbxf_w Not perfect evidence, but the best you will find without signing a Tesla non-disclosure agreement.

don’t most cars have proximity and collision detectors now?

Not Tesla though, it relies on cameras only.

leave it to elon to take existing technology and make it worse to sell it as innovative high tech. fucking moron

To err is human; To really foul things up you need a computer.

*fawn things up

It doesn’t have to not kill people to be an improvement, it just has to kill less people than people do

True in a purely logical sense, but assigning liability is a huge issue for self-driving vehicles.

As long as there’s manual controls the driver is responsible as they’re supposed to be ready to take over

That doesn’t sound like a self-driving car to me.

Because it’s not, it’s a car with assisted driving, like all cars you can drive at the moment and with which, surprise surprise, you are held responsible if there’s an accident while it’s in assisted mode.

That’s a low bar when you consider how stringent airline safety is in comparison, and that kills way less people than driving does. If sensors can save people’s lives, then knowingly not including them for profit is intentionally malicious.

I roll my eyes at the dishonest bad faith takes people have in the comments about how people do the same thing behind the wheel. Like that’s going to make autopiloting self-driving cars an exception. Least a person can react, can slow down or do anything that an unthinking, going-by-the-pixels computer can’t do at a whim.

How come human drivers have more fatalities and injuries per mile driven?

Musk can die in a fire, but self driving car tech seems to be vastly safer than human drivers when you do apples to apples comparisons. It’s like wearing a seatbelt, you certainly don’t need to have one to go from point A to point B, but you’re definitely safer with it - even if you are giving up a little control. Like a seatbelt, you can always take it off.

I honestly think it shouldn’t be called “self driving” or “autopilot” but should work more like the safety systems in Airbuses by simply not allowing the human to make a decision that would create a dangerous situation.

it’s just a deer

Deer are people too…

How many deer are on that road? It’s mowing down dozens of them in that video!

Deer often travel in herds so where there is one there are often more. In rural areas you can go miles without seeing one, and then see 10 in a few hundred feet. There are deer in those miles you didn’t see them as well, but they happened to not be near the road then.

You just need to buy the North America Animal Recognition AI subscription and this wouldn’t be an issue plebs, it will stop for 28 out of 139 mammals!

Just a small clarification… Teslas only kill forward or backwards. Hardly ever has a car killed left or right 😂.

As much as I hate Elon, self-driving cars are the future and will be way safer than some idiot behind the wheel

Yeah, but Elon’s self-driving cars aren’t self-driving, nor are they necessarily as safe as a good driver.

There are people out there who shouldn’t be able to drive, and in sane countries many of them don’t manage to get their licenses. But in the US for example, apparently you can’t get anywhere without a car, so until the public transit situation is solved, driver’s licenses need to be given out like candy :/ Exception being some cities with awesome public transit. The only one I’ve been to is NYC, where most people don’t really need to drive. I’d say the transit there is better than in my country.

And the worst part is that even once real SDCs exist and can be bought, not everyone can afford them. Or maybe they’ll be more like Uber or Bolt in that you hail one from an app and it picks you up - but then people in rural areas are still fucked without being able to drive themselves.

“As much as I hate elon…”

“I hate elon as much as the next guy, but…”

“Look, I’m no elon fan, but…”

I’m sure you all know, but to be clear, the above are the beginnings of sentences from people who don’t hate elon. They are sentences from people who like elon, but think you will hate them, or not consider their opinions, if they say out loud that they do, in fact, like elon.

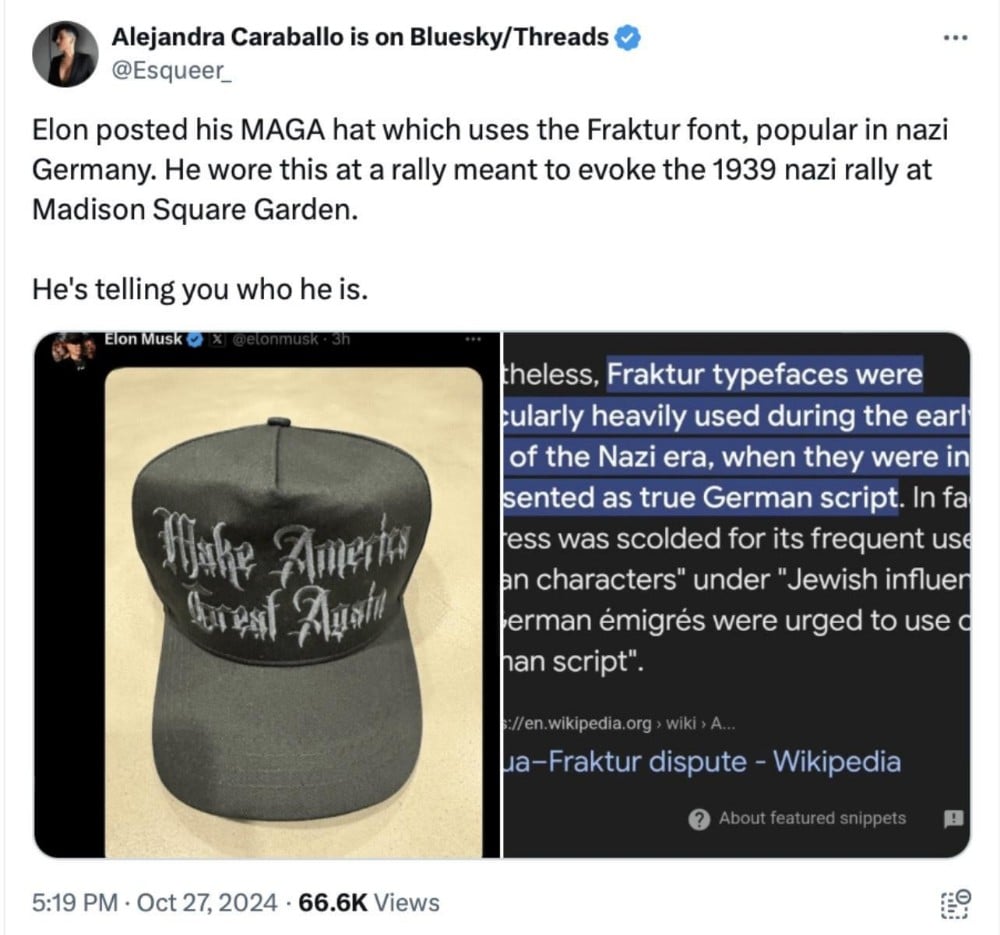

On a separate but related note, this is elon speaking at a hate-filled rally featuring a series of bigoted speakers, including himself. The rally very intentionally cosplayed an American Nazi rally that famously occurred at Madison Square Garden in the 1930s. To emphasize how on the nose this all was, elon wore a specially made hat - a hat that very deliberately used an especially prominent font from the Nazi era. They are literally SCREAMING it in your face and tattooing it on their foreheads.

elon has done nothing good or admirable with his life and elon will not do anything good or admirable with his life. You can’t compartmentalize your opinion on this, he sucks, on the whole.

I hate Elon. There is no grey area in that statement.

A self-driving vehicle is not exclusive to Elon.

I prefer electric cars over gas ones, and think that at some point computer controlled transportation will be more reliable than the average human driver. These aren’t endorsements of any person or company.

Why are you wrapping this up so much with the one dude?

It’s like saying that you can’t endorse online shopping unless you also like Bezos.

Driving is full of edge cases. Humans are also bad drivers who get edge cases wrong all the time.

The real question isn’t whether Tesla is better or worse in any one situation in particular, but how Tesla compares overall. If a Tesla is better in some situations and worse in others and so overall just as bad as a human I can accept it. If Tesla is overall worse then they shouldn’t be driving at all (if they can identify those situations they can stop and make a human take over). If a Tesla is overall better then I’ll accept a few edge cases where they are worse.

Tesla claims overall they are better, but they may not be telling the truth. One would think regulators have data for the above - but they are not talking about it.

Yeah there are edge cases in all directions.

When people want to say that something is very rare they should say “corner case,” but this doesn’t seem to have made it out of QA lingo and into the popular lexicon.

Tesla claims overall they are better, but they may not be telling the truth. One would think regulators have data for the above - but they are not talking about it.

The agency is asking if other similar FSD crashes have occurred in reduced roadway visibility conditions, and if Tesla has updated or modified the FSD system in a way that may affect it in such conditions.

It sure seems like they aren’t being very forthcoming with their data between this and being threatened with fines last year for not providing the data. That makes me suspect they still aren’t telling the truth.

It sure seems like they aren’t being very forthcoming with their data between this and being threatened with fines last year for not providing the data. That makes me suspect they still aren’t telling the truth.

I think their silence is very telling, just like their alleged crash test data on Cybertrucks. If your vehicles are that safe, why wouldn’t you be shoving that into every single selling point you have? Why wouldn’t that fact be plastered across every Gigafactory and blaring from every Tesla that drives past on the road? If Tesla’s FSD is that good, and Cybertrucks are that safe, why are they hiding those facts?

If the cybertruck is so safe in crashes they would be begging third parties to test it so they could smugly lord their 3rd party verified crash test data over everyone else.

But they don’t because they know it would be a repeat of smashing the bulletproof window on stage.

Humans are also bad drivers who get edge cases wrong all the time.

It would be so awesome if humans only got the edge cases wrong.

I’ve been able to get demos of autopilot in one of my friend’s cars, and I’ll always remember autopilot correctly stopping at a red light, followed by someone in the next lane over blowing right through it several seconds later at full speed.

Unfortunately “better than the worst human driver” is a bar we passed a long time ago. From recent demos I’d say we’re getting close to the “average driver”, at least for clear visibility conditions, but I don’t think even that’s enough to have actually driverless cars driving around.

There were over 9M car crashes with almost 40k deaths in the US in 2020, and that would be insane to just decide that’s acceptable for self driving cars as well. No company is going to want that blood on their hands.

Being safer than humans is a decent starting point, but safety should be maximized to the best of a machine’s capability, even if it means adding a sensor or two. Keeping screws loose on a Boeing airplane still makes the plane safer than driving, so Boeing should not be made to take responsibility.

Given that they market it as “supervised”, the question only has to be “are humans safer when using this tool than when not using it?”

One of the cool things I’ve noticed since recent updates, is the car giving a nudge to help me keep centered, even when I’m not using autopilot

If a Tesla is better in some situations and worse in others and so overall just as bad as a human I can accept it.

This idea has a serious problem: THE BUG.

We hear this idea very often, but you are disregarding the problem of a programmed solution: it makes its mistakes all the time. Infinitely.

Humans are also bad drivers who get edge cases wrong all the time.

So this is not exactly true.

Humans can learn, and humans can tell when they made an error, and try to do it differently next time. And all humans are different. They make different mistakes. This tiny fact is very important. It secures our survival.

The car does not know when it made a mistake, for example, when it killed a deer, or a person, and crashed its windshield and bent lots of its metal. It does not learn from it.

It would do it again and again.

And all the others would do exactly the same, because they run the same software with the same bug.

Now imagine 250 million people having 250 million Teslas, and then comes the day when each one of them decides to kill a person…

Tesla can detect a crash and send the last minute of data back so all cars learn from it. I don’t know if they do but they can.

I don’t know if they do but they can.

"Today on Oct 30 I ran into a deer but I was too dumb to see it, not even see any obstacle at all. I just did nothing. My driver had to do it all.

Grrrrrr.

Everybody please learn from that, wise up and get yourself some LIDAR!"

“there was no Danger to my Chassis”

- Vehicle needed lidar

- Vehicle should have a collision detection indicator for anomalous collisions and random mechanical problems